Photo credit: Clay Banks via Unsplash

When configuring a Load Balancer in OCI, the default behavior is to pass all traffic through to your backend servers. We do this because it keeps things simple and gets you up and running quickly.

But best practice for this sort of thing is to send only the kinds of traffic that you’re actually expecting to the backend servers. In other words, you filter IN the traffic you want (an allow list) and drop everything else, as opposed to filtering OUT potentially bad traffic (a deny list).

There are a bunch of reasons this is the best practice, but the two big ones are that:

- it avoids wasting compute resources on garbage inputs

- it is literally the smallest thing you can do to help prevent as-yet-unknown attacks against your servers.

In my little sample case here I want to effect two rules:

- the URL path must start with something we’re expecting – in my case that’s /myapp/

- the request must use an HTTP Verb I expect – specifically HEAD, GET, or POST
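
Before clicking through the console, it may help to see those two rules written out as plain logic. This is only a sketch of the idea in Python, not how the Load Balancer implements it; the names `ALLOWED_METHODS`, `ALLOWED_PREFIX`, and `is_allowed` are made up for illustration.

```python
# Illustrative only: the two allow-list rules from this post as plain logic.
# These names are hypothetical and are not part of any OCI API.
ALLOWED_METHODS = {"HEAD", "GET", "POST"}
ALLOWED_PREFIX = "/myapp/"


def is_allowed(method: str, path: str) -> bool:
    """Return True only when BOTH rules pass; everything else is dropped."""
    return method in ALLOWED_METHODS and path.startswith(ALLOWED_PREFIX)


for method, path in [
    ("GET", "/myapp/index.html"),
    ("DELETE", "/myapp/index.html"),
    ("GET", "/admin"),
]:
    verdict = "forward" if is_allowed(method, path) else "drop"
    print(f"{method} {path}: {verdict}")
```

Note that nothing here tries to recognize bad traffic. Anything that isn't explicitly expected simply never reaches the backends.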

The steps to enforce these rules are actually pretty simple. And they also happen to cover a good set of features of the Load Balancer. So this is a perfect use case to learn about what the OCI Load Balancer can really do!

Step 1: Create your Load Balancer

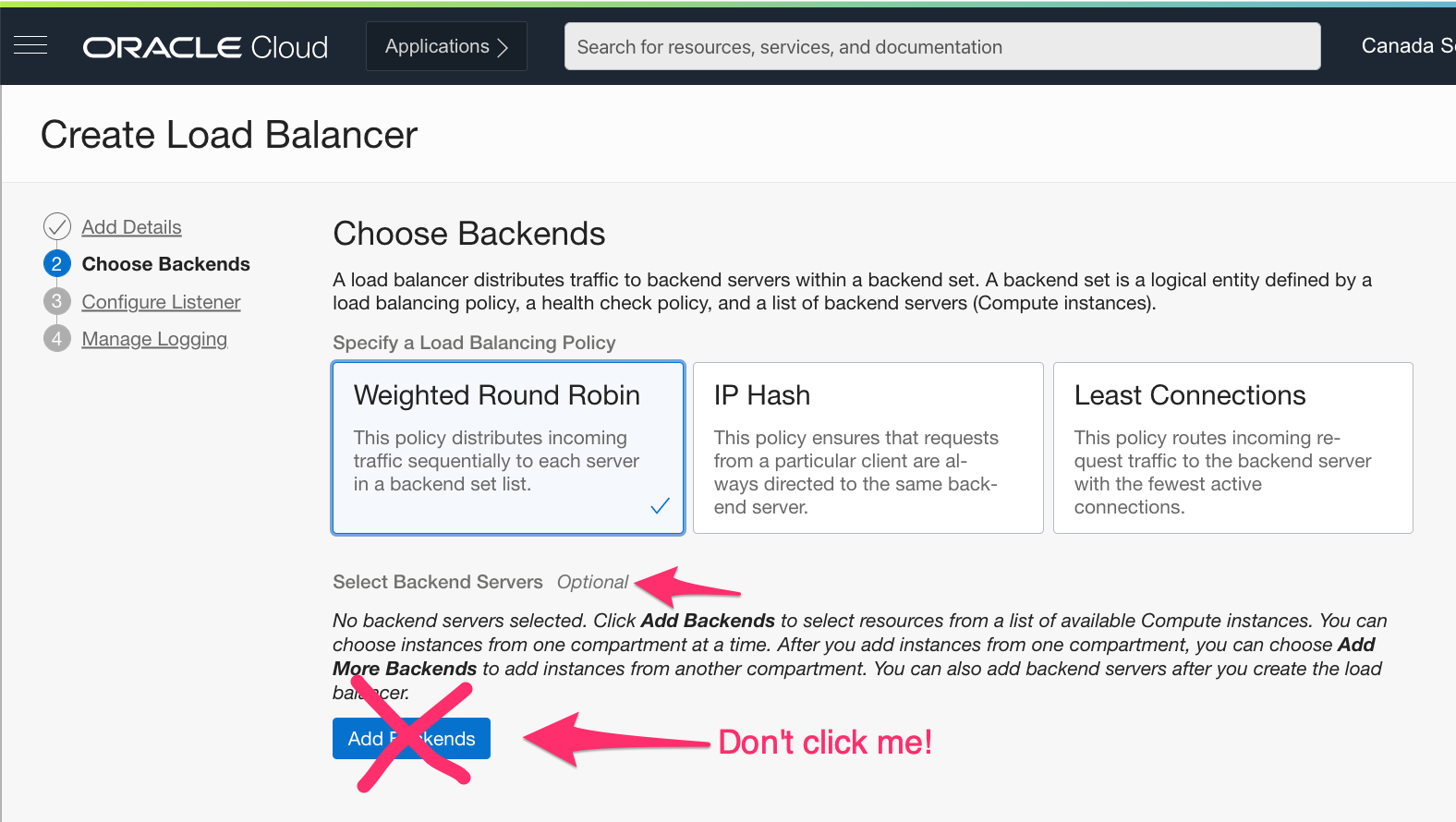

Open your OCI console and navigate to the Load Balancer. Click the Create button and fill in the name and the other required fields.

On this screen, you can see that you don’t actually have to select backends for the Load Balancer. We’re going to take advantage of that fact.

When you do this OCI will automatically create a backend set for you with no servers. This is exactly what we want – we’re going to ignore this backend set altogether!

Go ahead and “Next” your way through the screens, selecting appropriate values for your listener (HTTPS vs. HTTP, etc.), the listening port, and all the other options.

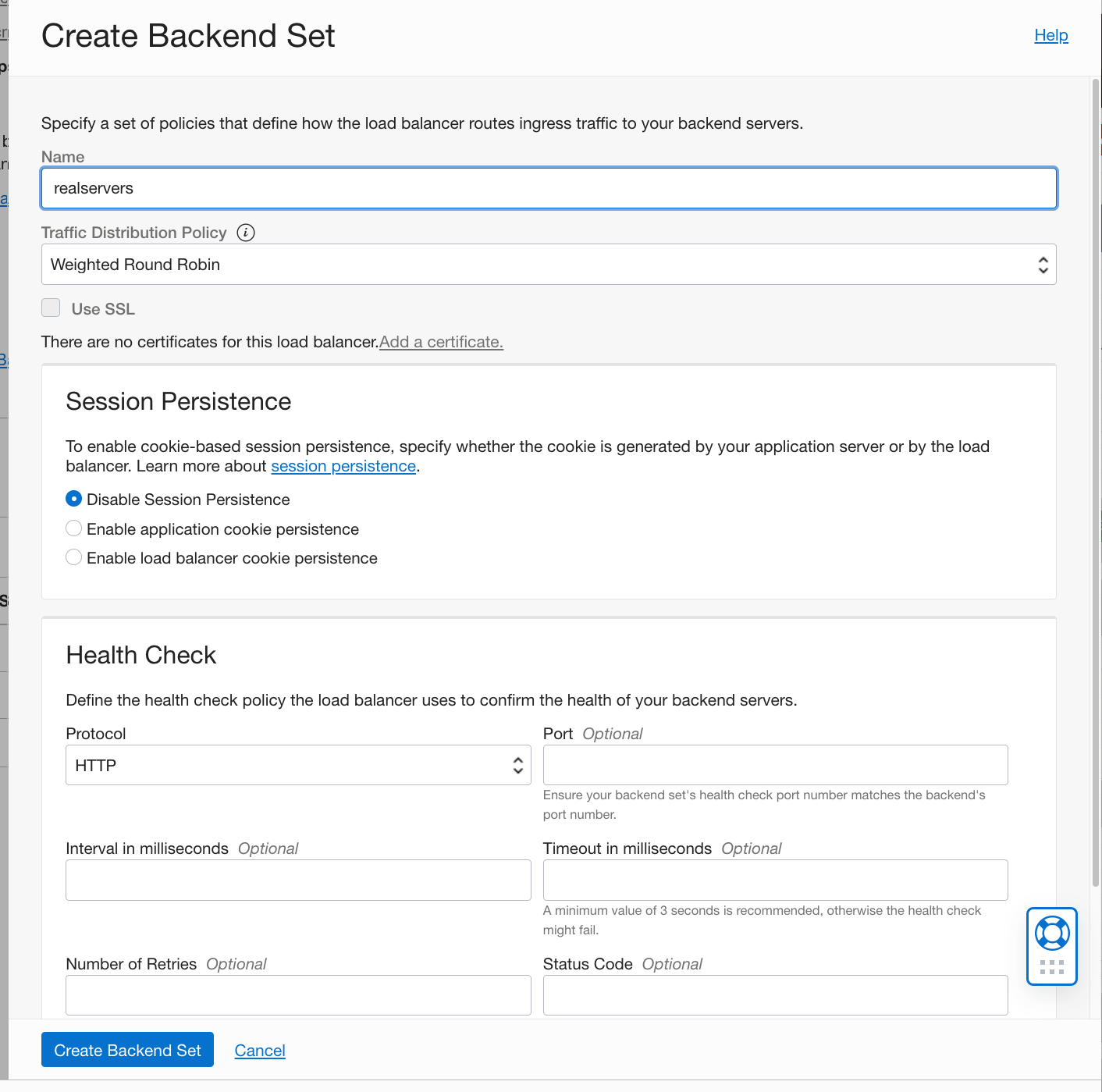

Step 2: Create another Backend Set

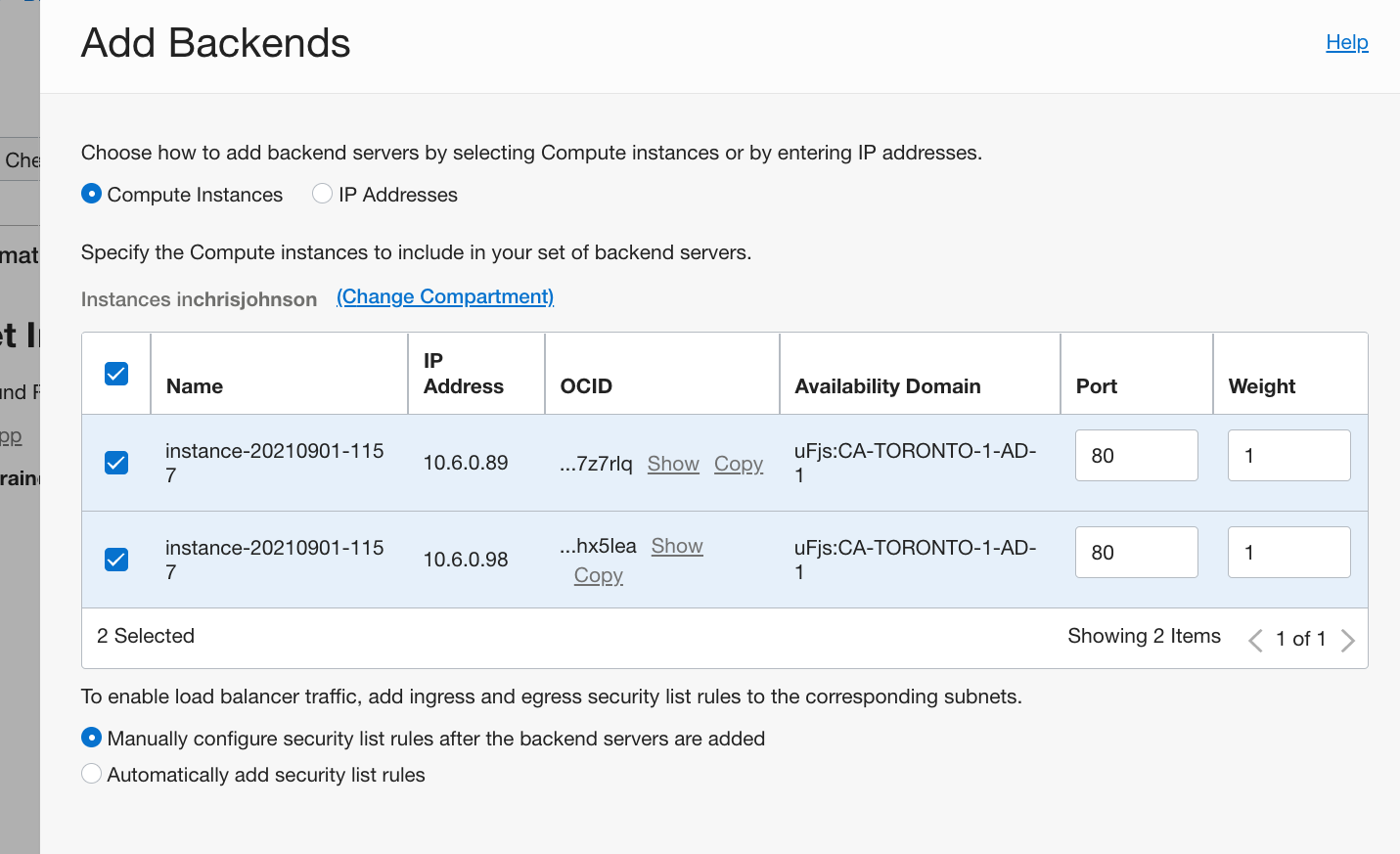

Once your Load Balancer has been created (which should take just a moment) click on the Backend Set navigation option and then click the Create button. We need a backend set to reach our actual web servers so give it a good name (e.g. “realservers”) and make any other selections for load balancing, health checks, etc. that you need. At a minimum that means you need to enter a Health Check URL but other options might be needed in your environment.

Finally, open that new Backend Set and select the web servers you want to use from the GUI, or enter their IP addresses. In my case I already have Security Lists open so I opted to ignore that setting.

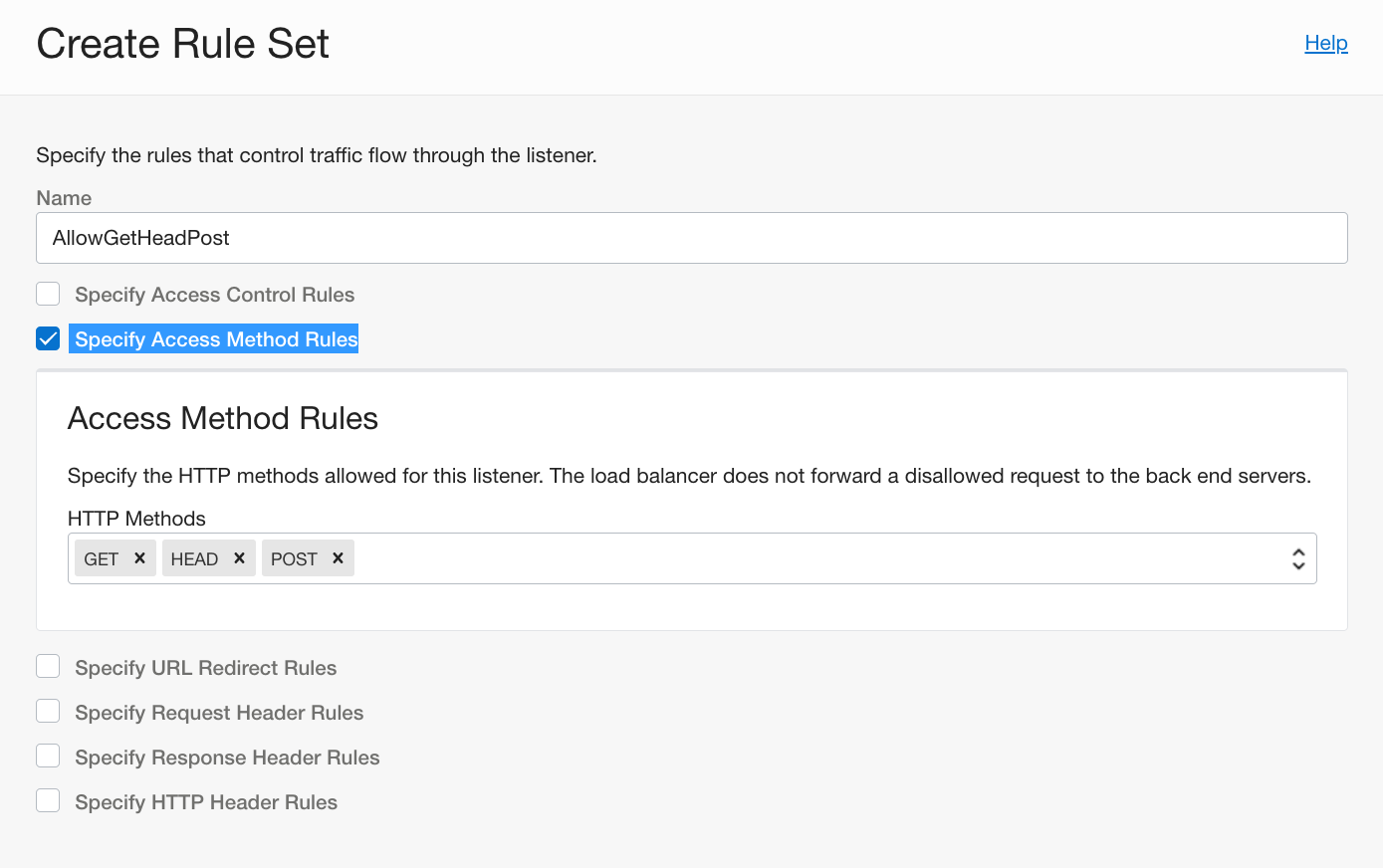

Step 3: Create a Rule Set

A Rule Set allows you to apply some simple controls to the load balancer. We’re going to use that to only allow GET, HEAD, and POST to our application.

Click on the Rule Set navigation and then click the Create button. On the resulting screen choose “Specify Access Method Rules” and then type in and pick HEAD, GET, and POST. These three verbs represent the very simplest actions HTTP can do. If you don’t know HTTP well what are you reading this blog post for? But OK – HEAD lets a client check on a file (to get its size, the last time it was modified, and some other stuff), GET retrieves the file, and POST allows you to submit a form.

Your result should look like this:

Go ahead and click Create.
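
If you would rather script this than click through the console, the same rule can be described as data. The sketch below builds a payload shaped the way I understand the OCI Load Balancer API to represent an access method rule (`action`, `allowedMethods`, `statusCode`). Treat those field names and the 405 default as assumptions to verify against the API reference; the Rule Set name `allowed_verbs` and the helper function are made up for this example.

```python
import json


def access_method_rule(methods, status_code=405):
    """Build one rule that rejects any HTTP method not in `methods`.

    Field names are assumed from the OCI Load Balancer API's rule object;
    check them against the current API reference before relying on them.
    """
    return {
        "action": "CONTROL_ACCESS_USING_HTTP_METHODS",
        "allowedMethods": sorted(methods),
        "statusCode": status_code,  # returned to clients using other verbs
    }


# "allowed_verbs" is a hypothetical Rule Set name.
rule_set = {
    "name": "allowed_verbs",
    "items": [access_method_rule({"HEAD", "GET", "POST"})],
}

print(json.dumps(rule_set, indent=2))
```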

Step 4: Create a Routing Policy

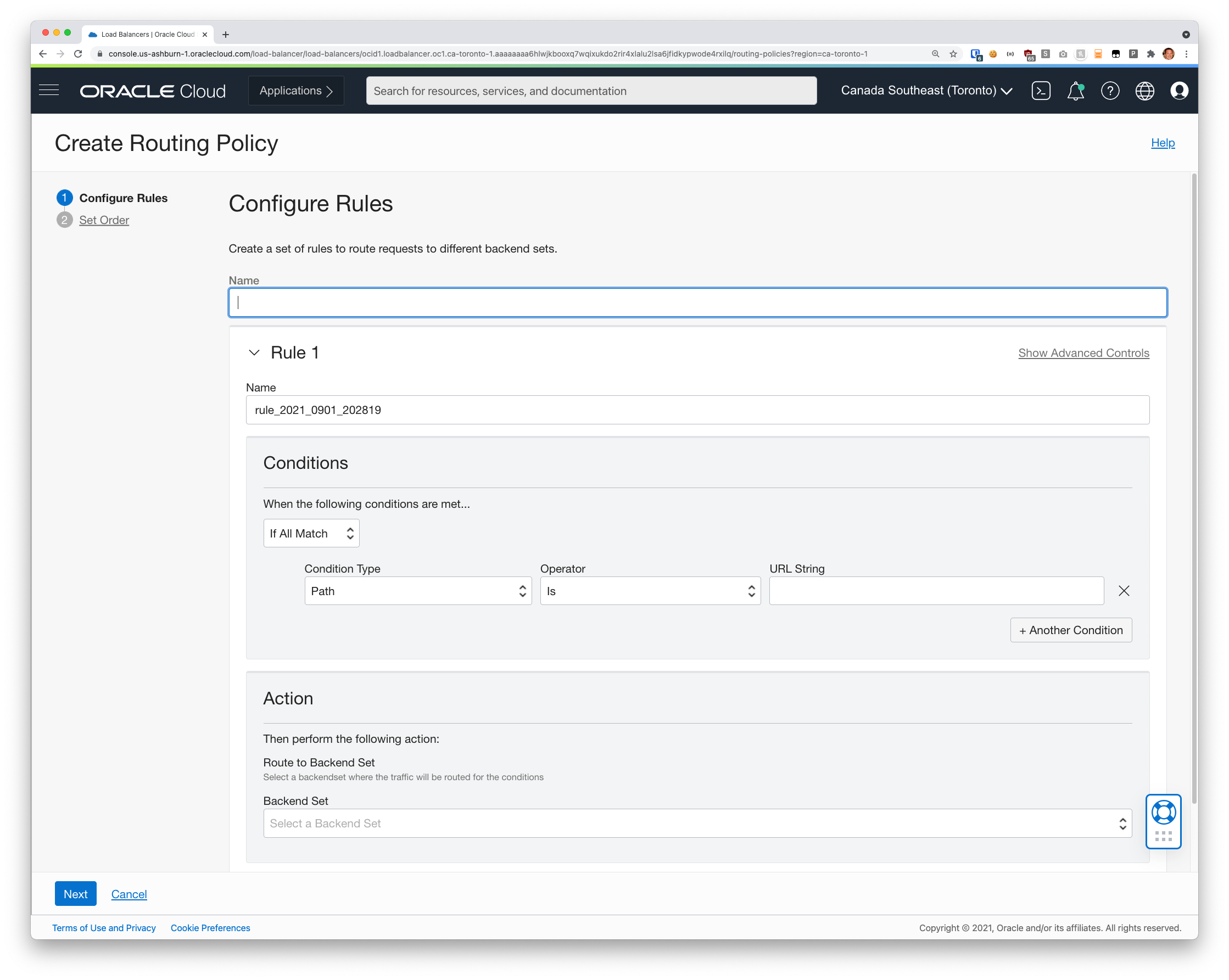

Click back on the Load Balancer details and navigate to the Routing Policy option. Click the Create button in there and you should see something like this:

There is a bit of power hidden on this screen, but all we need to do is enter a name, pick “Starts With” as the Operator, and enter “/myapp/” in the URL String box. And then finally choose “realservers” from the Backend Set selection box.

Hit Next and then the Create button and we’re done with our Routing Policy.
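
Behind the form, a Routing Policy rule boils down to a condition string plus an action. Here is a rough Python sketch of what I believe the equivalent rule looks like, assuming the routing policy language's `sw` ("starts with") operator and a `FORWARD_TO_BACKENDSET` action. Both are my reading of the OCI documentation rather than something shown on the console screen, and the rule name `myapp_only` is hypothetical.

```python
def starts_with_condition(prefix: str) -> str:
    """Return a routing condition matching URL paths that begin with `prefix`.

    Assumes the OCI routing policy language, where `sw` means "starts with".
    """
    if "'" in prefix:
        # Keep the sketch simple: refuse input that would need quote escaping.
        raise ValueError("prefix must not contain a single quote")
    return f"any(http.request.url.path sw '{prefix}')"


# "myapp_only" is a made-up rule name; "realservers" is the Backend Set
# created in Step 2.
routing_rule = {
    "name": "myapp_only",
    "condition": starts_with_condition("/myapp/"),
    "actions": [
        {"name": "FORWARD_TO_BACKENDSET", "backendSetName": "realservers"}
    ],
}

print(routing_rule["condition"])
```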

Step 5: Attach this Rule Set and Routing Policy to the listener

Now that we have created all the pieces we need, we can move on to putting them together: we tell the Load Balancer to use the Rule Set and Routing Policy we just created for any requests that come into this Listener.

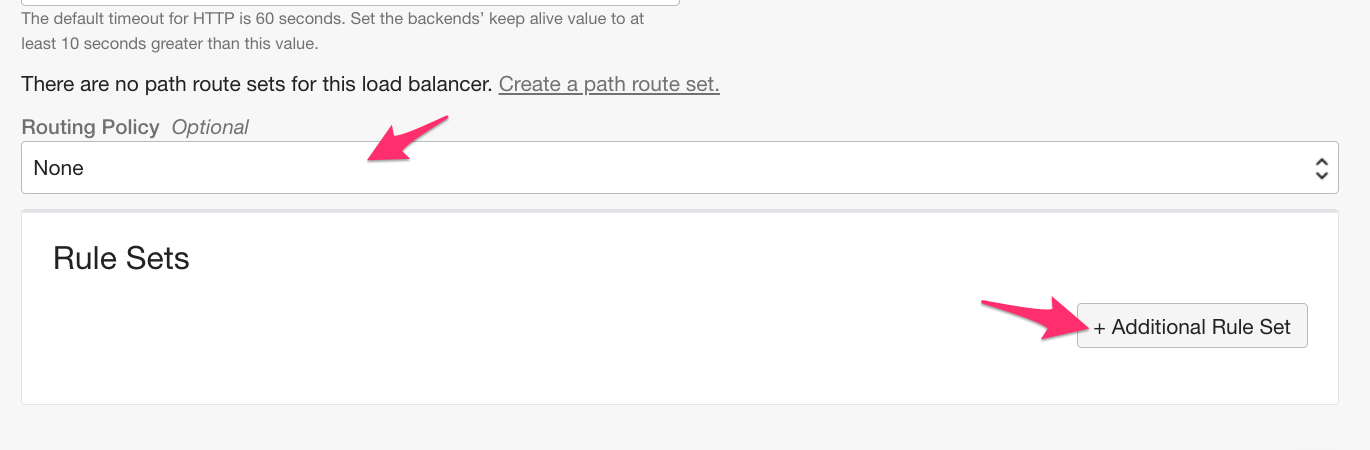

To do that, on the left navigation bar select Listeners and then edit the listener that got created when you created the Load Balancer in the first place.

Select the Routing Policy from the drop-down and click the Additional Rule Set button.

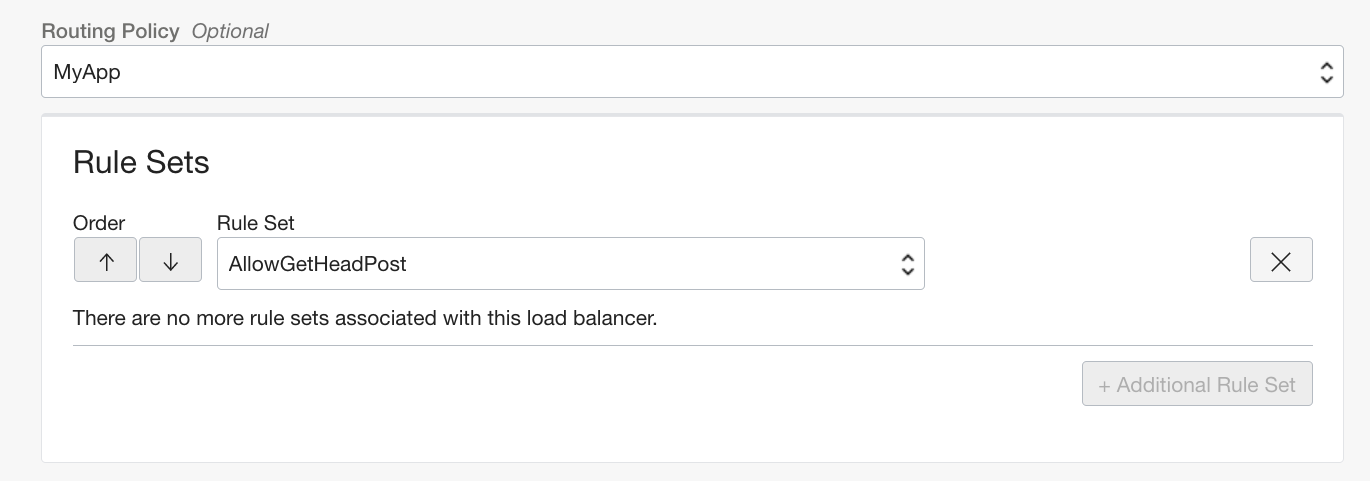

We only have one Routing Policy and one Rule Set, so the selections should be easy. The result should look something like this:

That’s it. Hit save and give the Load Balancer a moment to apply your changes.

Step 6: Test it

You don’t need me to tell you how to do this, but open a new tab or window and try to access your Load Balancer public IP address or name.

If you did everything right, when you access the Load Balancer IP directly you should get a 502 (Bad Gateway), which is a kind of server-side error. The Load Balancer is sending this error because the Routing Policy didn’t say to send the request to “realservers”, so it fell through to the default Backend Set, which doesn’t have any servers in it. So the Load Balancer basically shrugs its shoulders and says “I don’t know what I’m supposed to do with this so ERROR!”. It’s harmless to your real clients and doesn’t send anything to your real backends.

Then try accessing your application at /myapp/. If you did everything correctly you should see your app appear.
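
If you want something repeatable instead of a browser tab, a small script can run the same checks and also try a verb the Rule Set should reject. This is a minimal sketch using only the Python standard library. The probe list and function names are mine, and the exact status you get for a blocked verb depends on how your Rule Set is configured (I would expect a 405).

```python
import urllib.error
import urllib.request

# (method, path, what we expect to happen). Hypothetical probe list.
PROBES = [
    ("GET", "/", "no route: error from the empty default Backend Set"),
    ("GET", "/myapp/", "allowed: your app responds"),
    ("DELETE", "/myapp/", "blocked verb: rejected by the Rule Set"),
]


def build_url(base_url: str, path: str) -> str:
    """Join the Load Balancer address and a path without doubling slashes."""
    return base_url.rstrip("/") + path


def status_for(base_url: str, method: str, path: str, timeout: float = 5.0) -> int:
    """Send one request and return the HTTP status code, even for 4xx/5xx."""
    request = urllib.request.Request(build_url(base_url, path), method=method)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urllib raises on error statuses; the code is what we want to see.
        return err.code


def run_probes(base_url: str) -> None:
    """Print one line per probe so you can compare against expectations."""
    for method, path, note in PROBES:
        print(f"{method:6} {path:8} -> {status_for(base_url, method, path)}  ({note})")


# Usage (replace with your own Load Balancer address):
# run_probes("http://203.0.113.10")
```

The address in the usage comment is a documentation placeholder. If your listener uses HTTPS with a certificate your machine doesn't trust, `urlopen` will raise a `URLError` instead of returning a status.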

Step 7: What else?

There are only a couple of other things you might want to do. I’ll leave them up to you, the reader, but here’s what else to think about:

- If you are using Traffic Steering, a WAF, or a CDN in front of the application you might need to return 200 on some other URLs

- There are some other URLs that you might want to allow. For example favicon.ico and robots.txt

You should consider enabling the Load Balancer’s logs to see what URL patterns are coming in, and then evaluate tweaking your Routing Policy to allow some other paths.
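
Once you have some logs, a quick tally of the first path segment is usually enough to spot candidates like favicon.ico or robots.txt. The sketch below assumes you have already pulled the request paths out of your log entries into a list of strings; the log format itself isn't covered here, and `top_prefixes` is just an illustrative helper.

```python
from collections import Counter
from urllib.parse import urlsplit


def top_prefixes(request_targets, n=10):
    """Count requests by first path segment, most common first.

    `request_targets` is assumed to be an iterable of request paths such as
    "/myapp/login?x=1", already extracted from your logs.
    """
    counts = Counter()
    for target in request_targets:
        path = urlsplit(target).path  # drop any query string
        if path.startswith("/") and len(path) > 1:
            first_segment = path.split("/")[1]
        else:
            first_segment = ""
        counts["/" + first_segment] += 1
    return counts.most_common(n)


# Made-up sample data for illustration.
sample = ["/myapp/login", "/myapp/home?x=1", "/favicon.ico", "/robots.txt", "/myapp/"]
for prefix, hits in top_prefixes(sample):
    print(f"{hits:4}  {prefix}")
```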

Summary

Congratulations! I don’t want to say that you’ve done the bare minimum to protect your web application from attackers, but that’s basically what you’ve done.

The OCI Load Balancer will now prevent anyone from sending a request that doesn’t at least kinda sorta appear not dissimilar to something your application might want to receive and process!

Come back in a few weeks and I’ll revisit this post to show you what else you can do to make this even better.