Introduction

Generative AI refers to deep-learning models that can generate text, images, and other content based on the data they were trained on. It can perform tasks such as assisted authoring, summarization, and recommendations. Generative AI can augment business applications, for example, by automatically generating a draft job or product description, or by concisely summarizing an article to answer a customer support inquiry.

In this blog, we will explore using OCI Generative AI to develop an application powered by a large language model (LLM). A prompt is the input, from a user or a program, that is provided to an LLM, and this application uses dynamic prompts. Unlike hardcoded prompts, dynamic prompts are generated on the fly by combining a fixed template string with user input and non-static sources such as API calls. We will use LangChain to build the dynamic prompts. LangChain simplifies interactions with language models and supports features such as prompt templates, prompt chains, output parsers, and integration with components and resources such as APIs and databases. It is worth exploring LangChain's other features when building robust LLM applications.
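To make the idea concrete, here is a minimal, stdlib-only sketch of a dynamic prompt: a fixed template string is filled with user input plus a value fetched from a non-static source. The template text and the `fetch_team_context` callable are hypothetical stand-ins (for example, for an HR API call); in the application itself, LangChain's `PromptTemplate` plays the role of the template.

```python
from typing import Callable, Dict

# Fixed template string: the static part of the prompt (illustrative text).
TEMPLATE = (
    "Generate a job description for a {role} role requiring {skills}. "
    "Team context: {team_context}"
)


def build_dynamic_prompt(
    user_input: Dict[str, str],
    fetch_team_context: Callable[[str], str],
) -> str:
    """Fill the fixed template with user input and a value from a non-static source.

    fetch_team_context is a hypothetical stand-in for an API or database call.
    """
    team_context = fetch_team_context(user_input["team"])  # non-static source
    return TEMPLATE.format(
        role=user_input["role"],      # user input
        skills=user_input["skills"],  # user input
        team_context=team_context,
    )


if __name__ == "__main__":
    # A dict lookup stands in for the API call so the sketch runs offline.
    fake_api = {"payments": "Payments platform team, hybrid work"}
    print(build_dynamic_prompt(
        {"role": "developer", "skills": "java,spring", "team": "payments"},
        fetch_team_context=fake_api.__getitem__,
    ))
```

Only the template stays fixed; every run can produce a different prompt depending on what the user enters and what the external source returns.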

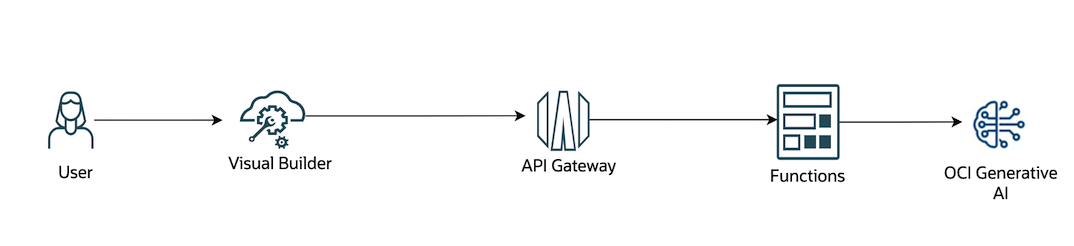

Architecture

Let’s consider a practical use case: an application that generates job descriptions from dynamic inputs such as skills, role, years of experience, and job responsibilities. The following diagram depicts the reference architecture.

This architecture uses the following components:

OCI Generative AI is a fully managed service that provides customizable large language models (LLMs). You can use the playground to try out the ready-to-use pre-trained models, or create and host your own fine-tuned custom models based on your data on dedicated AI clusters.

OCI Functions is a fully managed, multi-tenant, highly scalable, on-demand, Functions-as-a-Service platform.

OCI API Gateway enables you to publish APIs with private endpoints that are accessible from within your network, and which you can expose with public IP addresses if you want them to accept internet traffic.

Visual Builder is a browser-based application development tool that lets you create and deploy web, mobile, and progressive web interfaces.

Steps

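Note that you need to add the necessary IAM policies so that the OCI services can work together, for example, so that the Function can call OCI Generative AI with its resource principal and API Gateway can invoke the Function.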

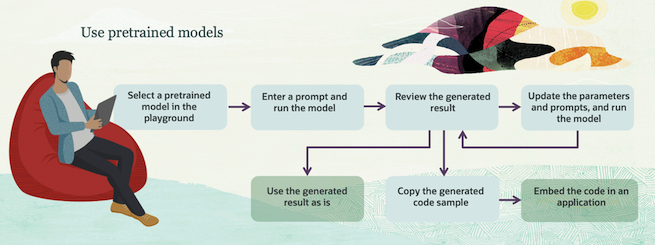

1. OCI Generative AI provides a set of pre-trained foundation models. You can select a foundation model in the playground to test and refine prompts and parameters. Once you are satisfied with the results, copy the code generated in the playground and embed it in your application. You can start with static prompts in the playground and replace them with dynamic prompts in your code later.

The following image shows how to use the playground to test your prompts and get the sample code.

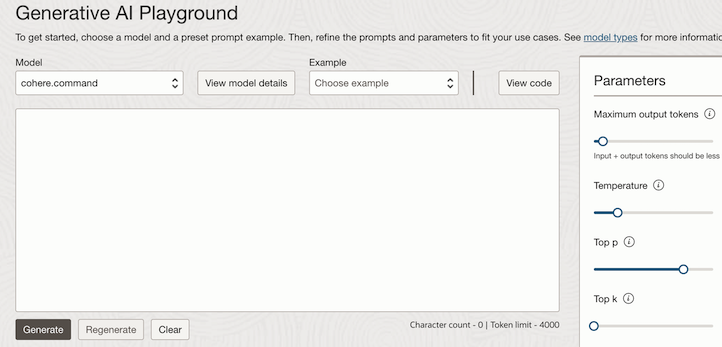

As the image shows, the OCI Generative AI playground lets you select a model and configure the parameters for your prompts. The View Code option shows the autogenerated code for running the prompt with the OCI Generative AI SDKs.
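Two of the parameters you tune in the playground, temperature and top_p, appear later in the Function code (temperature=0.7, top_p=0.75). The stdlib-only sketch below illustrates what these parameters conventionally mean in LLM sampling: temperature rescales the model's scores before they become probabilities, and top_p (nucleus sampling) restricts sampling to the smallest set of most likely tokens whose cumulative probability reaches p. It is a conceptual illustration over a toy vocabulary, not OCI Generative AI's internal implementation.

```python
import math
import random
from typing import Dict, Optional


def apply_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Softmax over logits / temperature; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def nucleus_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches top_p,
    then renormalize the kept probabilities so they sum to 1."""
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


def sample_next_token(
    logits: Dict[str, float],
    temperature: float = 0.7,
    top_p: float = 0.75,
    rng: Optional[random.Random] = None,
) -> str:
    """Pick one token: temperature scaling, then nucleus filtering, then a weighted draw."""
    rng = rng or random.Random()
    probs = nucleus_filter(apply_temperature(logits, temperature), top_p)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    toy_logits = {"engineer": 2.0, "developer": 1.5, "ninja": 0.1}
    print(nucleus_filter(apply_temperature(toy_logits, 0.7), 0.75))
    print(sample_next_token(toy_logits, rng=random.Random(0)))
```

Lowering either value makes the output more focused and repeatable; raising them makes it more varied, which is why it helps to settle on values in the playground before copying the code.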

2. Next, create an OCI Function that uses the code autogenerated by the OCI Generative AI playground.

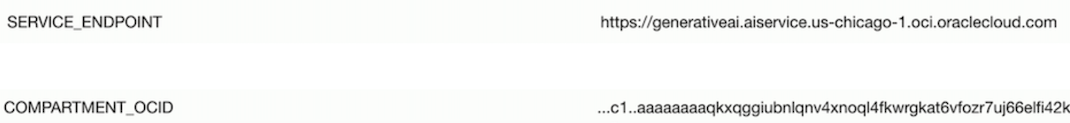

To start, create an OCI Functions application and set two application configuration parameters: SERVICE_ENDPOINT, the OCI Generative AI service endpoint, and COMPARTMENT_OCID, the OCID of the Generative AI compartment. The Function code reads these parameters.

Next, create a Python OCI Function named fn_genai_jobdescription.

func.yaml

schema_version: 20180708
name: fn_genai_jobdescription
version: 0.0.251
runtime: python
build_image: fnproject/python:3.8-dev
run_image: fnproject/python:3.8
entrypoint: /python/bin/fdk /function/func.py handler
memory: 2048

The contents of func.py are shown in the following sections.

Import the necessary modules

import io
import json
import logging
import os

import oci
import oci.auth.signers
import oci.generative_ai
from fdk import response
from langchain.prompts import PromptTemplate

Read the service endpoint and the OCI Generative AI compartment OCID from the Function application configuration parameters. Then create a resource principal signer and use it to initialize the GenerativeAiClient.

try:
    # Configuration parameters set on the Function application
    endpoint = os.getenv("SERVICE_ENDPOINT")
    compartment_ocid = os.getenv("COMPARTMENT_OCID")
    if not endpoint:
        raise ValueError("ERROR: Missing configuration key SERVICE_ENDPOINT")
    if not compartment_ocid:
        raise ValueError("ERROR: Missing configuration key COMPARTMENT_OCID")

    # Authenticate as the Function itself (resource principal), so no API keys are stored
    signer = oci.auth.signers.get_resource_principals_signer()
    generative_ai_client = oci.generative_ai.GenerativeAiClient(
        config={},
        service_endpoint=endpoint,
        signer=signer,
        retry_strategy=oci.retry.NoneRetryStrategy(),
    )
except Exception as e:
    logging.getLogger().error(e)
    raise

Use LangChain prompt templates to construct a dynamic prompt. For simplicity, we assume here that the user enters all the prompt variables. In practice, the variables can come from several sources, such as a REST API response or a database query.

Initialize oci.generative_ai.models.GenerateTextDetails() with the prompt, the LLM parameters, and the other request settings, and then call the generate_text() method to generate the text output.

The input variables to the prompt template are skills, role, experience, and qualifications.
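LangChain's `PromptTemplate` uses Python f-string-style `{placeholders}` by default, so for this template `prompt.format(...)` should yield the same text as Python's `str.format`. The stdlib-only sketch below renders the template with the values from the sample curl request, which is a quick way to inspect the exact prompt sent to the model. `render_job_prompt` is an illustrative name, and passing the request's responsibilities text as qualifications is an assumption, because the sample request has no qualifications field.

```python
# Same template string as in generate_job_description().
JOB_TEMPLATE = (
    "Generate a job description with skills {skills} for a role {role} "
    "having experience of {experience}. "
    "The qualifications expected from the candidate are {qualifications}"
)


def render_job_prompt(skills: str, role: str, experience: str, qualifications: str) -> str:
    """Render the prompt text the way PromptTemplate.format would (f-string style)."""
    return JOB_TEMPLATE.format(
        skills=skills, role=role, experience=experience, qualifications=qualifications
    )


if __name__ == "__main__":
    print(render_job_prompt(
        skills="java,spring",
        role="developer",
        experience="3+ year",
        # Assumption: the sample request's "responsibilities" text fills {qualifications}.
        qualifications="Analyzing user requirements to inform application design.Developing and testing software.",
    ))
```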

def generate_job_description(skills, role, experience, qualifications, generative_ai_client):
    # Fixed template string; the {placeholders} are filled in at request time
    prompt = PromptTemplate(
        input_variables=["skills", "role", "experience", "qualifications"],
        template="Generate a job description with skills {skills} for a role {role} having experience of {experience}. The qualifications expected from the candidate are {qualifications}",
    )

    generate_text_detail = oci.generative_ai.models.GenerateTextDetails()
    # Render the dynamic prompt from the caller's values
    prompts = [prompt.format(skills=skills, role=role, experience=experience, qualifications=qualifications)]
    generate_text_detail.prompts = prompts
    # Use the on-demand pre-trained Cohere Command model
    generate_text_detail.serving_mode = oci.generative_ai.models.OnDemandServingMode(model_id="cohere.command")
    generate_text_detail.compartment_id = compartment_ocid  # read from the application configuration
    # Generation parameters
    generate_text_detail.max_tokens = 300
    generate_text_detail.temperature = 0.7
    generate_text_detail.frequency_penalty = 0
    generate_text_detail.top_p = 0.75

    try:
        generate_text_response = generative_ai_client.generate_text(generate_text_detail)
        # Convert the response model to a dict via its JSON string form, then take the first generated text
        text_response = json.loads(str(generate_text_response.data))
        return text_response["generated_texts"][0][0]["text"]
    except Exception as e:
        logging.getLogger().error(e)
        raise
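The function above returns `text_response["generated_texts"][0][0]["text"]`, which raises a bare `KeyError` or `IndexError` if the response has an unexpected shape. A small helper like the following turns that into a clearer error message. It is a sketch that assumes the same nested shape the code indexes into; `extract_first_text` is an illustrative name, and the sample payload is made up, not real service output.

```python
from typing import Any, Mapping


def extract_first_text(payload: Mapping[str, Any]) -> str:
    """Return payload["generated_texts"][0][0]["text"] or raise a descriptive ValueError.

    Assumes the nested-list shape used by generate_job_description(); it has not
    been checked against the service's API reference.
    """
    try:
        text = payload["generated_texts"][0][0]["text"]
    except (KeyError, IndexError, TypeError) as exc:
        raise ValueError(f"Unexpected generate_text response shape: {exc!r}") from exc
    if not isinstance(text, str):
        raise ValueError(f"Expected generated text to be a string, got {type(text).__name__}")
    return text


if __name__ == "__main__":
    # Made-up payload with the assumed shape.
    sample = {"generated_texts": [[{"text": "Job Title: Java Developer ..."}]]}
    print(extract_first_text(sample))
```

Inside generate_job_description(), the return statement would then become `return extract_first_text(text_response)`, and the existing `except` block would log the clearer message.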

Finally, define the Function handler() method.

def handler(ctx, data: io.BytesIO = None):
    try:
        body = json.loads(data.getvalue())
        skills = body["skills"]
        role = body["role"]
        experience = body["experience"]
        # The sample request sends "responsibilities"; pass it as the template's qualifications variable
        qualifications = body["responsibilities"]
        job_description = generate_job_description(skills, role, experience, qualifications, generative_ai_client)
        return response.Response(ctx, response_data=job_description)
    except Exception as handler_error:
        logging.getLogger().error(handler_error)
        return response.Response(
            ctx,
            status_code=500,
            response_data="Processing failed due to " + str(handler_error),
        )
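As written, handler() reports every failure, including a malformed or incomplete request body, as HTTP 500. One option, sketched below with the standard library only, is to validate the body first so that client errors can be returned as 400. `REQUIRED_FIELDS` and `parse_request()` are hypothetical names; the field list follows the keys in the sample curl request.

```python
import json
from typing import Dict, Tuple

# Hypothetical field list, matching the keys in the sample curl request.
REQUIRED_FIELDS = ("skills", "role", "experience", "responsibilities")


def parse_request(raw: bytes) -> Tuple[int, Dict[str, str]]:
    """Validate a request body before calling the model.

    Returns (200, fields) when the body is usable, or (400, {"error": ...}) when
    it is not, so that client mistakes are not reported as server errors.
    """
    try:
        body = json.loads(raw or b"{}")
    except ValueError as exc:  # covers JSONDecodeError and invalid UTF-8
        return 400, {"error": f"Body is not valid JSON: {exc}"}
    if not isinstance(body, dict):
        return 400, {"error": "Body must be a JSON object"}
    missing = [field for field in REQUIRED_FIELDS if body.get(field) in (None, "")]
    if missing:
        return 400, {"error": "Missing fields: " + ", ".join(missing)}
    return 200, {field: str(body[field]) for field in REQUIRED_FIELDS}
```

In handler(), you might call `status, result = parse_request(data.getvalue() if data else b"")` and, when status is 400, return `response.Response(ctx, status_code=400, response_data=json.dumps(result))` before calling the model, keeping 500 for genuine server-side failures.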

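Deploy the Function with the following command, replacing <function-application-name> with the name of your Function application:

fn -v deploy --app <function-application-name>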

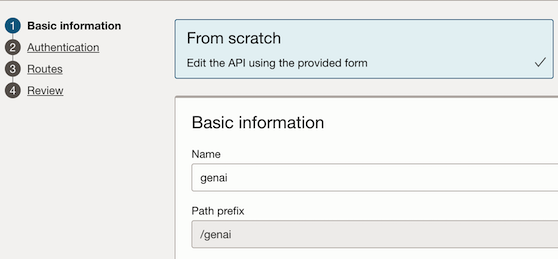

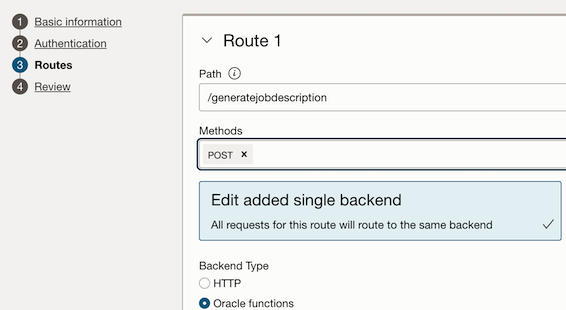

3. Expose the Function using API Gateway.

Create an API Gateway deployment.

Create a new route. Enter a path, select POST as the method, and select Function as the backend type.

Choose the fn_genai_jobdescription Function as the Function to invoke.

Note down the deployment URL. This will be used in the Service Connection settings within Visual Builder.

A sample REST API call using the API Gateway deployment URL looks like this:

curl --location 'https://af...t3ea.apigateway.us-ashburn-1.oci.customer-oci.com/genai/generatejobdescription' --header 'Content-Type: application/json' --data '{"skills":"java,spring","experience":"3+ year","role":"developer","responsibilities":"Analyzing user requirements to inform application design.Developing and testing software."}'
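If you want to call the deployment from a Python script instead of curl, the standard library's `urllib.request` can send the same POST request. This is a sketch: `GATEWAY_URL` is a hypothetical placeholder for your own deployment URL, and `build_request()` and `call_job_description_api()` are illustrative names. The Function returns the generated job description as the response body.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with your API Gateway deployment URL.
GATEWAY_URL = "https://<your-gateway-host>/genai/generatejobdescription"

SAMPLE_PAYLOAD = {
    "skills": "java,spring",
    "experience": "3+ year",
    "role": "developer",
    "responsibilities": "Analyzing user requirements to inform application design.Developing and testing software.",
}


def build_request(url: str, payload: dict) -> urllib.request.Request:
    """Build the same POST request as the curl example (JSON body, JSON content type)."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_job_description_api(url: str, payload: dict, timeout: float = 60.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(build_request(url, payload), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

Once `GATEWAY_URL` points at a real deployment, `print(call_job_description_api(GATEWAY_URL, SAMPLE_PAYLOAD))` should print the generated job description. The generous timeout allows for model generation time.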

Visual Builder

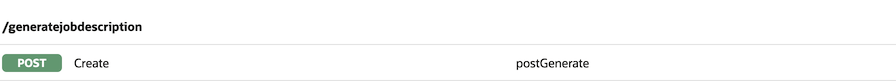

1. Create a service connection to the API Gateway deployment endpoint.

2. Create a page with input fields for skills, role, years of experience, and job responsibilities, and a button with an action chain that invokes the service connection endpoint.

3. Clicking the Generate button invokes OCI Generative AI, through the API Gateway endpoint and the Function, to generate a job description from the values entered in the Skills, Experience, Role, and Responsibilities fields.

Conclusion

Combining OCI Generative AI, LangChain, and other OCI services opens up a wide range of potential applications. Build on this example and explore what else you can create.