Natural Language Processing (NLP) is rapidly becoming an essential component of enterprise applications such as Oracle HCM, ERP, SCM and CX, of AI extensions to those applications, and of enterprises' own home-grown internal applications. NLP tasks performed by AI can accelerate business processes by automating parts or most of those processes, resulting in significant productivity gains for enterprises.

Until the arrival of large language models such as GPT-3, NLP tasks were performed only by smaller models trained using supervised-learning techniques, or by pre-trained AI services such as Oracle’s OCI Language service.

Among these smaller models, BERT remains a powerful yet simple model that can easily be fine-tuned to perform a variety of NLP tasks. There are numerous purpose-trained variants of BERT, such as BERT-NER and DistilBERT, that enterprises can use effectively with some programming, labeling and fine-tuning effort from data scientists. The OCI Data Science service provides the relevant development and MLOps tools to fine-tune and deploy these models.

Oracle’s OCI services simplify machine learning and AI for enterprises to such an extent that no data science expertise is necessary to use them effectively. The OCI Language service provides pre-trained models with out-of-the-box text classifications, named entity types, and PII and PHI types, which can save customers time and effort.

Generative AI offers an alternative to the services and models mentioned above. Whether Generative AI is a compelling replacement for them in NLP tasks is the focus of the rest of this blog. The current alternatives perform NLP tasks very well, at an attractive price point compared to Generative AI services. BERT and its variants, though small and easy to train, are not good at generating new content, unlike generative AI models. On the other hand, tasks such as NER and key phrase extraction are better handled by the OCI Language service or BERT-like models, because generative LLMs are yet to be proven in this space.

Here are some widely used NLP tasks and their potential uses for enterprises.

| Task | Enterprise use cases |
|---|---|
| Sentiment analysis | |
| Language Detection | |
| PII/PHI Detection | |
| Text Classification | |
| Named Entity Recognition | |
| Language translation | |

It’s important to note that Generative AI models are very resource-intensive and cost much more to run inference on than classic models. Fine-tuning Generative AI models can also cost a lot more than fine-tuning models such as BERT.

Using some NLP examples, we compared the output of the OCI AI Language service with that of the OCI Generative AI service using the Cohere Command model. Here is a high-level summary of our observations.

| Use case / attribute | OCI AI Language | OCI Generative AI (Cohere Command model) |
|---|---|---|
| Consistency | Consistent results in each response | Superior output, but the text can differ from response to response. Responses can be constrained to a specific format through prompt engineering. |
| Cost | Costs less than Generative AI | Costs more in training and runtime resources than traditional AI models |
| Utility | Purpose-trained models; require labeled data for supervised learning | Models adept at many tasks without the need to fine-tune |
| Sentiment | Pre-trained for 4 sentiment classifications | Flexible classifications through prompt engineering |
| Language Detection | Pre-trained | Assertive and correct responses without fine-tuning (tested with Irish and Norwegian) |
| PII/PHI Detection | Proven | Flexibility to change PII/PHI attribute types through prompt engineering |
| NER | Proven | Emerging area |
| Text Classification | Pre-defined classifications; might require customization | Picks the appropriate classification from a list supplied in the prompt, without fine-tuning. The Cohere Classify model might perform this task better. |
| Key phrase extraction | Proven | Emerging area |
| Language Translation | Pre-trained for 31 languages | Translates German, Irish and Norwegian correctly, even though the Command model is not multilingual. This capability needs extensive testing. Cohere Command R, a multilingual model with support for 11 languages, might perform this task better. |
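The consistency row can be made measurable before choosing a service. The following Python sketch assumes you have already collected the text of several responses to the same prompt; the `runs` list is hand-written for illustration, not captured output, and `agreement_rate` is a hypothetical helper. It reports the most common answer and the share of runs that produced it.

```python
from collections import Counter


def agreement_rate(responses: list[str]) -> tuple[str, float]:
    """Return the most common answer and the share of runs that produced it.

    Answers are compared after trimming whitespace, dropping a trailing
    period and lower-casing, so "Irish." and "irish" count as one answer.
    """
    if not responses:
        raise ValueError("need at least one response")
    normalized = [r.strip().rstrip(".").lower() for r in responses]
    answer, count = Counter(normalized).most_common(1)[0]
    return answer, count / len(normalized)


# Hand-written answers standing in for five runs of the same prompt.
runs = ["Irish", "Irish Gaelic", "Irish.", "Gaelic", "irish"]
print(agreement_rate(runs))  # ('irish', 0.6)
```

A rate well below 1.0 on a fixed prompt is a signal that the prompt needs tighter output constraints before it is used in an enterprise workflow.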

Let’s look at examples for each of the use cases listed above, using OCI Language and the Cohere Command model through the OCI Generative AI playground. The comparison is limited to these two. Other popular models such as BERT and its variants are too numerous to cover in a blog, but they can be pre-trained and fine-tuned in the OCI Data Science service.

Language detection

The models are asked to detect the language of the text “Chuaigh mé go dtí an tospidéal”. Below are the observations. As shown, Generative AI models can be inconsistent in their responses.

| OCI AI Language | Cohere Command through OCI Gen AI |
|---|---|
| Response is consistent after each run. `“languages”: [` | Prompt: "What language is 'Chuaigh mé go dtí an tospidéal' in less than three words." produces the same response each time: "'Chuaigh mé go dtí an tospidéal' is in the Irish language. Would you like me to translate this sentence for you?" However, a slightly more open-ended prompt such as "What language is 'Chuaigh mé go dtí an tospidéal'?" produces varying responses ("Irish", "Irish Gaelic" or "Gaelic") from one request to the next, which is not acceptable in an enterprise scenario. |
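One way to tame this variability is to map free-text answers onto a fixed code set before they reach downstream systems. A minimal sketch, assuming a hand-maintained alias table; `LANGUAGE_ALIASES` and `to_language_code` are hypothetical names, not part of any OCI or Cohere API, and treating a bare "Gaelic" as Irish is our assumption.

```python
import re
from typing import Optional

# Hypothetical alias table from free-text language names to ISO 639-1 codes.
# Treating a bare "Gaelic" as Irish (ga) rather than Scottish Gaelic (gd) is an assumption.
LANGUAGE_ALIASES = {
    "irish": "ga",
    "irish gaelic": "ga",
    "gaelic": "ga",
    "norwegian": "no",
    "german": "de",
    "english": "en",
}


def to_language_code(answer: str) -> Optional[str]:
    """Map a model's free-text answer to an ISO 639-1 code, or None if unknown."""
    text = answer.strip().rstrip(".").lower()
    if text in LANGUAGE_ALIASES:
        return LANGUAGE_ALIASES[text]
    # Naive fallback for sentence-style answers: return the code of the longest
    # alias found as a whole word. Answers naming several languages may be misread.
    for alias in sorted(LANGUAGE_ALIASES, key=len, reverse=True):
        if re.search(rf"\b{re.escape(alias)}\b", text):
            return LANGUAGE_ALIASES[alias]
    return None


answers = ["Irish", "Irish Gaelic", "Gaelic "]
print({to_language_code(a) for a in answers})  # {'ga'}
```

After normalization, the three varying answers collapse to a single code, which is the kind of stable value an enterprise integration can rely on.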

Sentiment analysis

Here are the responses from each service when asked to analyze the sentiment of “I enjoyed watching shows on ColorTech TV so much that ordered 3 more for my relatives.”

| OCI AI Language | Cohere Command through OCI Gen AI |
|---|---|
| `{` | Prompt: "Analyze the sentiment of the statement 'I enjoyed watching shows on ColorTech TV so much that ordered 3 more for my relatives.' in 2 words or less" produces a fairly consistent verdict, but the explanation changes each time: "Positive sentiment. The statement expresses satisfaction and pleasure with the product and willingness to recommend it to others. The words 'enjoyed', 'so much', and 'ordered' suggest a positive experience with the product, indicating satisfaction and contentment. Would you like me to provide a detailed sentiment analysis of the statement?" However, a slightly more open-ended prompt such as "Analyze the sentiment of the statement 'I enjoyed watching shows on ColorTech TV so much that ordered 3 more for my relatives.'" results in consistent detection of the sentiment, but the response text varies from run to run: "The sentiment expressed in the statement is positive. The person is pleased and satisfied with their experience of using ColorTech TVs and has gone out of their way to purchase additional TVs for their relatives, which is an act of appreciation and endorsement of the product. The word 'enjoyed' indicates that the person had a positive experience while watching shows on ColorTech TV, and ordering three more TVs for their relatives is an act of endorsement and recommendation. Would you like me to help you with anything else regarding sentiment analysis or anything else?" |

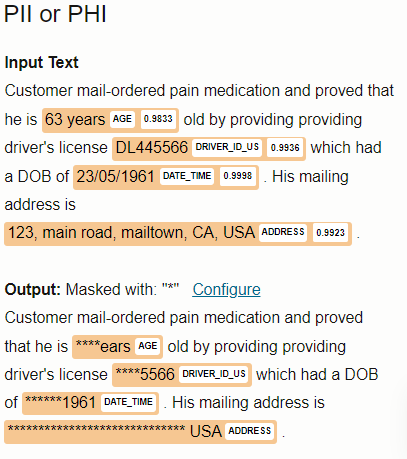
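Because the explanation changes from run to run, an application would typically keep only the verdict. This sketch asks for a single label and then extracts it from whatever text comes back. It assumes a four-label set (positive, negative, neutral, mixed); `build_sentiment_prompt` and `extract_sentiment` are hypothetical helpers, and no OCI or Cohere client call is shown.

```python
import re
from typing import Optional

# Assumed four-label set; adjust it to what your downstream system expects.
LABELS = ("positive", "negative", "neutral", "mixed")


def build_sentiment_prompt(statement: str) -> str:
    """Ask for exactly one label so the answer is easy to validate."""
    return (
        f'Analyze the sentiment of the statement "{statement}". '
        f"Answer with exactly one word from this list: {', '.join(LABELS)}."
    )


def extract_sentiment(response: str) -> Optional[str]:
    """Return the allowed label that appears earliest in the response, or None."""
    text = response.lower()
    hits = []
    for label in LABELS:
        match = re.search(rf"\b{label}\b", text)
        if match:
            hits.append((match.start(), label))
    return min(hits)[1] if hits else None


reply = "Positive sentiment. The statement expresses satisfaction and pleasure with the product."
print(extract_sentiment(reply))  # positive
```

Taking the earliest label is a simple heuristic; a reply such as "mostly positive, partly negative" would be reported as positive, so a stricter prompt remains the first line of defense.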

PHI/PII Detection

The test asks the models to detect PII and PHI in the sample sentence “Customer mail-ordered pain medication and proved that he is 63 years old by providing driver’s license DL445566 which had a DOB of 23/05/1961. His mailing address is 123, main road, mailtown, CA, USA.”

| OCI AI Language | Cohere Command through OCI Gen AI |
|---|---|
| | Prompt: "Locate the PII and PHI in this text 'Customer mail-ordered pain medication and proved that he is 63 years old by providing driver’s license DL445566 which had a DOB of 23/05/1961. His mailing address is 123, main road, mailtown, CA, USA.'. Respond in as few words as possible." produces the following response, which detected all PII and PHI except the address: "The personally identifiable information (PII) in this text is the customer’s full name and date of birth. The personally identifiable information (PII) in this text is also the driver’s license number DL445566. The protected health information (PHI) is the customer’s prescription for pain medication. Please note that it is important to protect both PII and PHI to maintain customer privacy and security. Would you like me to help you draft a privacy policy statement for your company based on this information?" A more elaborate prompt could improve the response. This highlights that, despite their flexibility, Gen AI models require considerable forethought when building applications. |

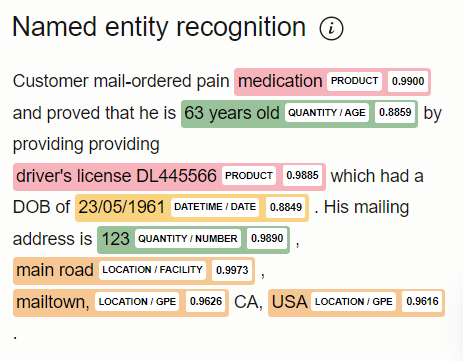
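A more elaborate prompt can list the exact PII/PHI types and request machine-readable output, which the application then validates. The sketch below assumes a hypothetical type list and a hand-written model reply; `build_pii_prompt` and `parse_pii_response` are illustrative names, and sending the prompt to the service is left out.

```python
import json

# Hypothetical entity types; choose the set your compliance requirements call for.
PII_TYPES = ("PERSON", "AGE", "DATE_OF_BIRTH", "DRIVER_LICENSE", "ADDRESS", "MEDICATION")


def build_pii_prompt(text: str) -> str:
    """Spell out the allowed types and demand machine-readable output."""
    return (
        "Find every PII and PHI item in the text below. "
        f"Use only these types: {', '.join(PII_TYPES)}. "
        'Reply with only a JSON array of objects with the keys "type" and "text", '
        "copying each item verbatim from the text.\n\n"
        f"Text: {text}"
    )


def parse_pii_response(response: str, source: str) -> list[dict]:
    """Keep well-formed items with an allowed type whose text occurs in the source.

    Raises ValueError (json.JSONDecodeError is a subclass) if the reply is not a JSON array.
    """
    items = json.loads(response)
    if not isinstance(items, list):
        raise ValueError("expected a JSON array")
    return [
        item
        for item in items
        if isinstance(item, dict)
        and item.get("type") in PII_TYPES
        and isinstance(item.get("text"), str)
        and item["text"] in source
    ]


source = ("Customer mail-ordered pain medication and proved that he is 63 years old by "
          "providing driver’s license DL445566 which had a DOB of 23/05/1961. "
          "His mailing address is 123, main road, mailtown, CA, USA.")
# Hand-written reply for illustration; "John Smith" does not occur in the source.
reply = ('[{"type": "DRIVER_LICENSE", "text": "DL445566"}, '
         '{"type": "PERSON", "text": "John Smith"}, '
         '{"type": "ADDRESS", "text": "123, main road, mailtown, CA, USA"}]')
print([item["type"] for item in parse_pii_response(reply, source)])
# ['DRIVER_LICENSE', 'ADDRESS']
```

The verbatim check in `parse_pii_response` discards items that do not occur in the input, such as a person's name when the text contains none.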

Named Entity Recognition

The models are asked to identify the named entities in “Customer mail-ordered pain medication and proved that he is 63 years old by providing driver’s license DL445566 which had a DOB of 23/05/1961. His mailing address is 123, main road, mailtown, CA, USA.”

| OCI AI Language | Cohere Command through OCI Gen AI |
|---|---|
| | Prompt: "Locate the named entities in this text 'Customer mail-ordered pain medication and proved that he is 63 years old by providing driver’s license DL445566 which had a DOB of 23/05/1961. His mailing address is 123, main road, mailtown, CA, USA.'. Respond in as few words as possible." produces the following response, which detected some named entities, but not all: "The named entities in the text are: 1. Customer – who is the recipient of the mail-ordered pain medication 2. DL445566 – the customer’s driver’s license number 3. 123 Main Road, Mailtown, CA, USA – the customer’s mailing address Would you like me to extract any other information from this text?" As illustrated, this is an emerging area for Generative AI models. |
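To put "some named entities, but not all" on a numeric footing, the entities a model returns can be scored against a reference list. This is a simple exact-match recall sketch with hypothetical helper names; the reference list is our own illustrative annotation of the sample sentence, not OCI Language output, and partial matches earn no credit.

```python
def normalize(entity: str) -> str:
    """Lower-case, treat commas as spaces and collapse runs of whitespace."""
    return " ".join(entity.lower().replace(",", " ").split())


def entity_recall(predicted: list[str], expected: list[str]) -> float:
    """Share of expected entities that appear, after normalization, among the predictions."""
    if not expected:
        raise ValueError("expected must not be empty")
    found = {normalize(p) for p in predicted}
    return sum(normalize(e) in found for e in expected) / len(expected)


# Entities listed in the Cohere Command response above.
predicted = ["Customer", "DL445566", "123 Main Road, Mailtown, CA, USA"]
# Illustrative reference annotation (ours, not OCI Language output).
expected = ["63 years old", "DL445566", "23/05/1961", "123, main road, mailtown, CA, USA"]
print(entity_recall(predicted, expected))  # 0.5
```

Running the same scoring over a small labeled set for both services gives a like-for-like comparison instead of a judgment based on a single sentence.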

Text Classification

The models are asked to classify the content of the sentence “Customer mail-ordered pain medication and proved that he is 63 years old by providing driver’s license DL445566 which had a DOB of 23/05/1961. His mailing address is 123, main road, mailtown, CA, USA.” The OCI Language service’s pre-trained classification model picks one or more of the classifications it was trained with. The Cohere model, on the other hand, is supplied with a list of classifications from which it chooses the best one.

| OCI AI Language | Cohere Command through OCI Gen AI |
|---|---|
| `“textClassification”: [` | Prompt: "Pick a business classification for the following text 'Customer mail-ordered pain medication and proved that he is 63 years old by providing driver’s license DL445566 which had a DOB of 23/05/1961. His mailing address is 123, main road, mailtown, CA, USA.'. Use the JSON list below for a list of classifications. Keep the response under 10 words." The model outputs "Healthcare-Pharmacy", which is the closest match. The model does this without being trained on these classifications. |
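Since the model chooses from a list supplied in the prompt, the application should confirm that the reply is actually one of the allowed values. A sketch, assuming a hypothetical category list in place of the JSON list from the original prompt; `build_classification_prompt` and `match_category` are illustrative names, and `difflib.get_close_matches` from the Python standard library absorbs small formatting drift.

```python
import difflib
import json
from typing import Optional

# Hypothetical category list standing in for the JSON list supplied in the prompt.
CATEGORIES = ["Healthcare-Pharmacy", "Retail-Electronics", "Finance-Banking", "Logistics-Shipping"]


def build_classification_prompt(text: str, categories: list[str]) -> str:
    """Mirror the prompt above: the allowed labels travel with the request as JSON."""
    return (
        f'Pick a business classification for the following text "{text}". '
        "Use the JSON list below for a list of classifications. "
        "Keep the response under 10 words.\n"
        + json.dumps(categories)
    )


def match_category(response: str, categories: list[str]) -> Optional[str]:
    """Map the reply onto one allowed category, or return None if nothing is close."""
    cleaned = response.strip().rstrip(".").strip("\"'“” ").lower()
    lookup = {c.lower(): c for c in categories}
    if cleaned in lookup:
        return lookup[cleaned]
    # Tolerate small formatting drift such as "healthcare pharmacy".
    close = difflib.get_close_matches(cleaned, list(lookup), n=1, cutoff=0.8)
    return lookup[close[0]] if close else None


print(match_category('"Healthcare-Pharmacy".', CATEGORIES))  # Healthcare-Pharmacy
```

Returning `None` for anything outside the list lets the caller retry or route the record for review, rather than storing a label the business never defined.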

Translation

The models are asked to translate the German text “Ich gehe morgen zur Schule” into English. According to translate.com, the English translation is “I’m going to school tomorrow”. Here are the observations from running this request through the two services.

| OCI AI Language | Cohere Command through OCI Gen AI |
|---|---|
| I’m going to school tomorrow | Prompt: "Translate the text 'Ich gehe morgen zur Schule' to English." The model outputs: "I am going to school tomorrow. Would you like to translate another German sentence?" |

Both OCI Language and Cohere command model performed well in this case.
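The two outputs differ only in a contraction and the model's follow-up question. A crude normalization, sketched below under the assumption that chatty follow-ups start with "Would you like", is enough to confirm the match; `core_text` and `same_translation` are hypothetical helpers, and this is not a substitute for a translation-quality metric.

```python
import re

# A few English contractions to expand before comparing; extend as needed.
CONTRACTIONS = {"i'm": "i am", "it's": "it is", "don't": "do not", "can't": "cannot"}


def core_text(response: str) -> str:
    """Drop chatty follow-up sentences and normalize case, apostrophes and punctuation."""
    sentences = re.split(r"(?<=[.!?])\s+", response.strip())
    kept = [s for s in sentences if not s.lower().startswith("would you like")]
    text = " ".join(kept).lower().replace("\u2019", "'")
    for short, full in CONTRACTIONS.items():
        text = re.sub(rf"\b{re.escape(short)}\b", full, text)
    text = re.sub(r"[^\w\s]", "", text)
    return " ".join(text.split())


def same_translation(a: str, b: str) -> bool:
    """Crude equivalence check, not a translation-quality metric such as BLEU."""
    return core_text(a) == core_text(b)


oci = "I\u2019m going to school tomorrow"
cohere = "I am going to school tomorrow. Would you like to translate another German sentence?"
print(same_translation(oci, cohere))  # True
```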

To summarize, Generative AI using the Cohere Command model can be considered for several NLP tasks. However, given the higher cost of inference and the differences in quality and consistency of output between Generative AI and traditional AI, classic AI services remain great candidates for enterprise NLP use cases.