As architects, we rarely encounter simple, one-size-fits-all network designs. Real-world deployments often evolve over time, with new requirements layered onto existing production environments. In this post, I’ll walk through a customer use case that demonstrates how to isolate Oracle Services Network (OSN) traffic from internet-bound traffic in an OCI hub-and-spoke architecture without disrupting on-premises routing.

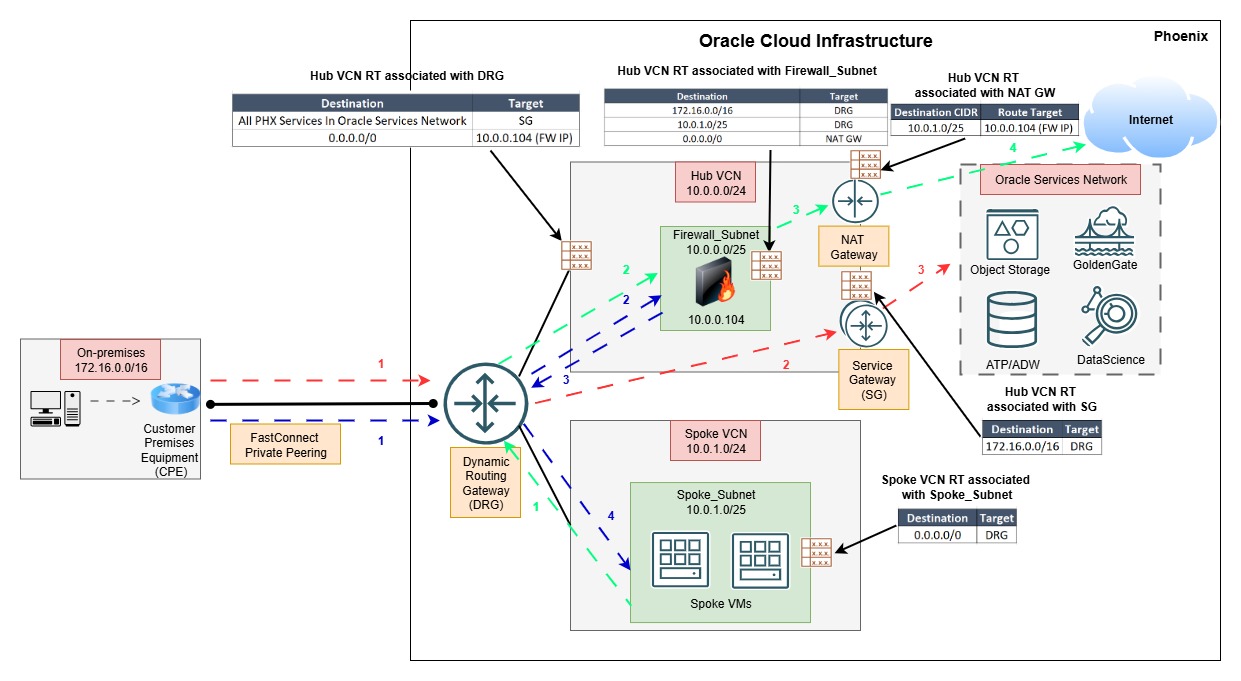

Initial Architecture

The customer began with a straightforward setup:

- Transit connectivity to OSN via FastConnect private peering between on-premises and OCI.

- A single VCN with standard routing to OSN.

New Requirements: The environment needed to evolve to support the following:

- Firewall insertion in the hub VCN and a new spoke VCN with private subnets – All on-premises traffic to the spoke VMs must pass through the hub firewall for IDS/IPS inspection.

- Internet access from spoke VMs via the hub firewall and NAT gateway – By default, internet traffic was routed back to on-premises, but it now had to flow through OCI.

- OSN traffic bypasses the firewall – Critical to maintaining performance and simplicity.

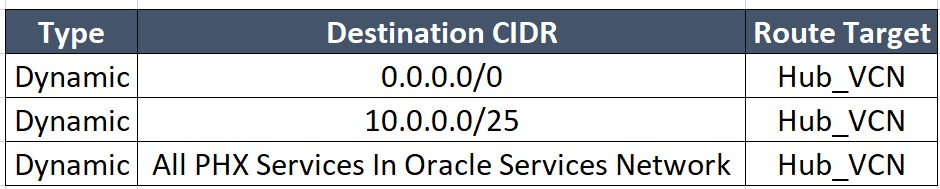

Route Table for each DRG attachment:

Virtual Circuit:

Hub_VCN:

Spoke_VCN:

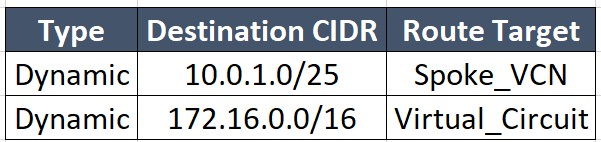

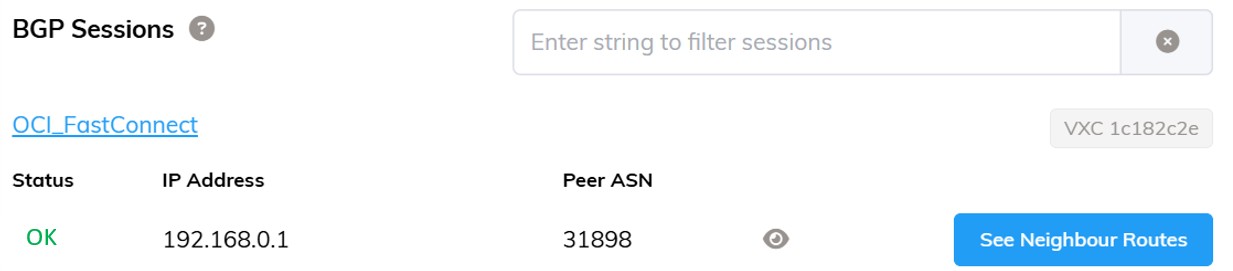

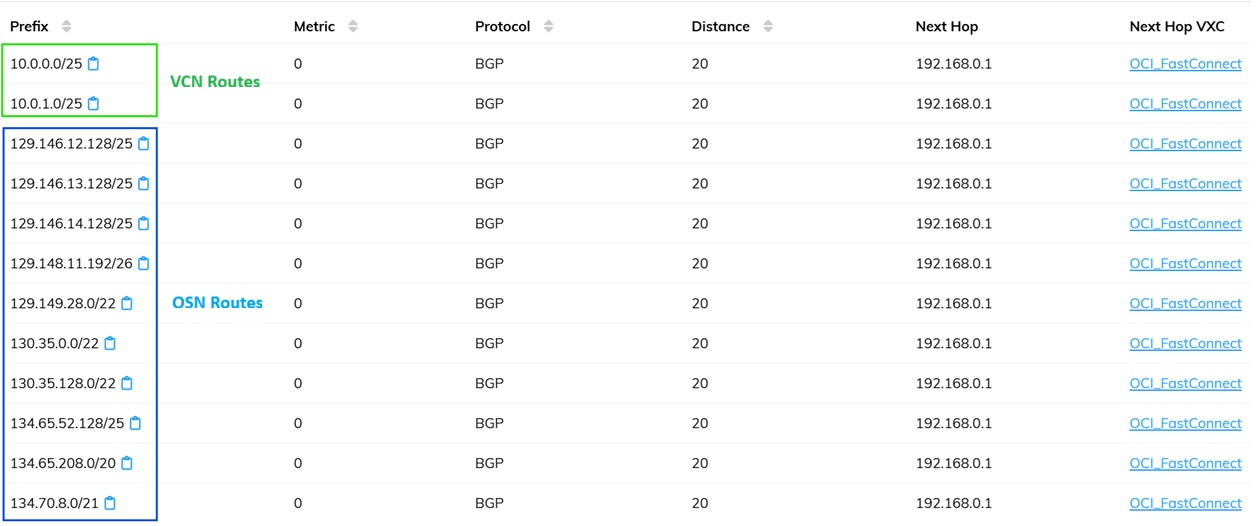

On-premises Route Table (OCI via BGP):

The Challenge:

Adding a default route (0.0.0.0/0) to the hub VCN route table for the firewall/NAT gateway caused unintended side effects:

- DRG route tables began importing the quad-zero route.

- FastConnect BGP then advertised this route back to on-premises.

- The customer’s on-premises default routing was redirected via OCI.
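The chain of side effects above can be illustrated with a small toy model. This is only a sketch under stated assumptions: the `Route` class, the `import_routes` helper, and the CIDR values are hypothetical and are not OCI APIs or the customer's real addressing. It assumes, as described above, that whatever the Virtual Circuit attachment's DRG route table imports is what BGP advertises to on-premises.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    cidr: str
    source: str  # DRG attachment the route was learned from


def import_routes(candidates, allowed_sources):
    """Keep only routes learned from attachments in allowed_sources."""
    return [r for r in candidates if r.source in allowed_sources]


# Hypothetical CIDRs, for illustration only.
hub_routes = [
    Route("10.0.0.0/16", "Hub_VCN"),
    Route("0.0.0.0/0", "Hub_VCN"),  # default route added for firewall/NAT
]
spoke_routes = [Route("10.1.0.0/16", "Spoke_VCN")]

# The Virtual Circuit route table imports from both hub and spoke attachments,
# so the quad-zero route comes along with the hub VCN CIDR.
vc_table = import_routes(hub_routes + spoke_routes, {"Hub_VCN", "Spoke_VCN"})

# Assumption: every route in this table is advertised to on-premises over BGP.
advertised_to_onprem = sorted(r.cidr for r in vc_table)
print(advertised_to_onprem)
```

In this model the default route cannot be separated from the other hub routes, because the import decision is made per attachment rather than per prefix.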

We explored several options, each of which fell short:

- BGP route filtering – Not acceptable since the customer couldn’t change their on-premises setup.

- Static routes for OSN services – Impractical because multiple OSN endpoints had no fixed IPs.

- Removing the quad-zero route – Would break internet access from spoke VMs.

The Solution: Introducing a Dedicated Service VCN

We created a separate Service VCN dedicated to OSN traffic. The hub VCN continued handling internet and firewall traffic, but no longer managed OSN routes directly.

Key changes:

- Import only Service VCN routes into the DRG route table of the Virtual Circuit attachment.

- Add static routes for the hub and spoke VCN subnets to that same route table, with the hub attachment as the target.
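The two key changes can be sketched as a toy model of the Virtual Circuit attachment's route table. Everything here is an illustrative assumption: `Route`, `build_vc_route_table`, and the CIDRs are hypothetical (192.0.2.0/24 is a documentation range standing in for whatever the Service VCN attachment exposes), and none of it is an OCI API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    cidr: str
    next_hop: str  # DRG attachment the traffic is forwarded to
    kind: str      # "imported" or "static"


def build_vc_route_table(learned, import_from, static_routes):
    """Combine routes imported from selected attachments with static routes."""
    imported = [
        Route(cidr, attachment, "imported")
        for attachment, cidrs in learned.items()
        if attachment in import_from
        for cidr in cidrs
    ]
    statics = [Route(cidr, hop, "static") for cidr, hop in static_routes]
    return imported + statics


# Hypothetical routes offered by each attachment.
learned = {
    "Service_VCN": ["192.0.2.0/24"],
    "Hub_VCN": ["10.0.0.0/16", "0.0.0.0/0"],
    "Spoke_VCN": ["10.1.0.0/16"],
}

vc_table = build_vc_route_table(
    learned,
    import_from={"Service_VCN"},  # change 1: import only Service VCN routes
    static_routes=[               # change 2: hub and spoke CIDRs via the hub
        ("10.0.0.0/16", "Hub_VCN"),
        ("10.1.0.0/16", "Hub_VCN"),
    ],
)

# Assumption: the contents of this table are what on-premises learns via BGP.
advertised_to_onprem = {r.cidr for r in vc_table}
print(sorted(advertised_to_onprem))
```

Because the hub attachment is no longer an import source, its default route never enters the table, while the static entries still steer hub and spoke traffic to the hub attachment.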

This architecture achieved three critical goals:

- No default route (0.0.0.0/0) in the DRG Virtual Circuit route table – Preventing unwanted route propagation to on-premises.

- Firewall inspection for spoke VMs – All traffic from on-premises still flowed through the hub firewall.

- Internet access from spoke VMs via hub firewall/NAT gateway – Meeting the new internet breakout requirement.
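As a quick sanity check of these goals, a longest-prefix-match lookup can show where traffic arriving from on-premises would be forwarded. This is a minimal sketch: the `VC_ROUTE_TABLE` contents and the `next_hop` helper are hypothetical, and the CIDRs are placeholders rather than real OSN or customer ranges.

```python
import ipaddress

# Hypothetical Virtual Circuit route table: destination CIDR -> DRG attachment.
VC_ROUTE_TABLE = {
    "192.0.2.0/24": "Service_VCN",  # stand-in for OSN-related routes
    "10.0.0.0/16": "Hub_VCN",       # hub VCN, static route
    "10.1.0.0/16": "Hub_VCN",       # spoke VCN, static route via the hub
}


def next_hop(table, dest_ip):
    """Return the attachment for the most specific matching route, or None."""
    dest = ipaddress.ip_address(dest_ip)
    matches = [
        (ipaddress.ip_network(cidr), hop)
        for cidr, hop in table.items()
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no default route, so unmatched traffic is not attracted
    return max(matches, key=lambda m: m[0].prefixlen)[1]


print(next_hop(VC_ROUTE_TABLE, "10.1.5.20"))   # spoke VM, reached via the hub
print(next_hop(VC_ROUTE_TABLE, "192.0.2.10"))  # OSN stand-in, via Service VCN
print(next_hop(VC_ROUTE_TABLE, "8.8.8.8"))     # internet destination, no match
```

With no quad-zero entry in the table, an internet destination has no match here, which corresponds to on-premises keeping its own default route.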

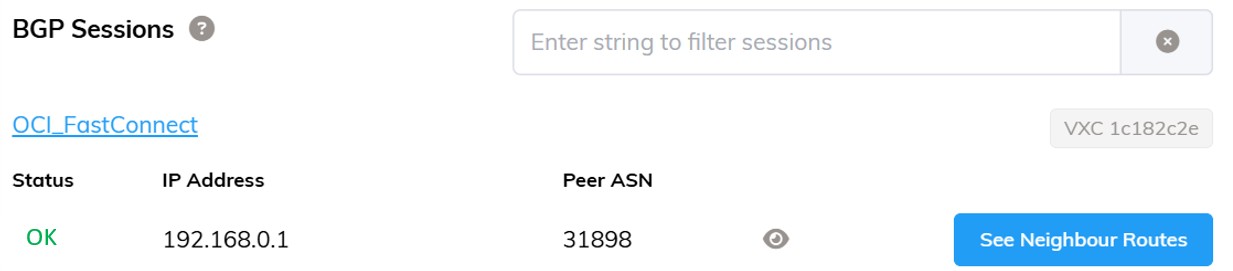

Route Table for each DRG attachment:

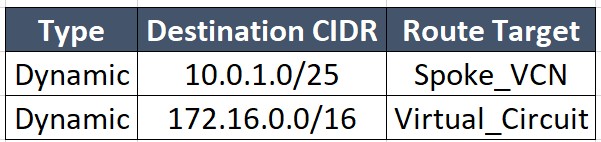

Virtual Circuit:

Service_VCN:

Hub_VCN:

Spoke_VCN:

On-premises Route Table (OCI via BGP):

By separating OSN traffic into its own VCN, we eliminated routing conflicts while meeting all customer requirements:

- Secure and compliant packet inspection.

- Internet access through the hub firewall.

- Unchanged on-premises routing policies.

Conclusion:

Moving OSN traffic into a dedicated Service VCN kept the quad-zero route out of the Virtual Circuit route table, so it was never advertised back to on-premises. The hub firewall still inspects traffic to the spoke VMs, the spoke VMs reach the internet through the hub firewall and NAT gateway, and the customer did not have to change anything in the on-premises routing environment.