Introduction

Enterprise Scheduler Service (ESS) is the backbone of batch and background processing in Oracle Fusion applications. Monitoring ESS jobs is critical to ensure business continuity, data freshness, SLA adherence, and operational confidence.

Enterprise customers rely on thousands of Oracle Fusion ESS jobs to run payroll, financial close, integrations, billing, and compliance workloads.

Traditional ESS monitoring techniques include:

- ESS Scheduled Processes UI (reactive, manual)

- ESS Scheduler REST APIs (operational but limited analytics)

- Database queries on ESS history tables (powerful but not operationalized)

However, these approaches lack trend detection & analysis and proactive risk detection, failures are detected after business impact, performance degradation goes unnoticed for weeks, SLA breaches are reactive, not preventable.

This leads to outages, escalations, audit risks.

Objective

The objective of the ESS Job Health & Risk Observatory is to provide an industry-aligned, proactive, and decision-oriented monitoring framework.

- Detect failures, delays, and anomalies early

- Provide SLA and performance intelligence

- Reduce noise and focus on actionable risks

The ESS Job Health & Risk Observatory changes Fusion ESS monitoring from simple job tracking into actionable operational intelligence. Instead of reacting to failures, customers gain clear visibility into current job health, emerging risks, and future failure trends using identified key operational metrics (E.g… Average runtime, Success Rate, Failure Rate, Runtime volatility, Failure probability, SLA breach probability, Stuck Job probability, Job Regression, Job risk score).

With more than 30 purpose-built widgets and queries, the dashboard reveals patterns that are not visible in standard ESS views. These include runtime drift, recurring failures, schedule congestion, SLA exposure, and abnormal execution behavior.

A key capability of the observatory is its rule-based detection and alarm framework. Using Detection rules for any event or risk condition, such as repeated failures, abnormal duration spikes, missed schedules, SLA breaches. These rules drive automatic alerts and alarms, ensuring teams are notified early and consistently when risk thresholds are crossed.

By combining real-time execution signals with historical trends and behavioral analysis, teams can detect issues early, reduce alert noise, and focus only on what matters. This enables a shift from firefighting to prevention, with better confidence in scheduling, capacity planning, and SLA commitments.

When enriched with OCI Log Analytics AI (LoganAI), the same insights support anomaly detection and faster root-cause analysis

This dashboard delivers derived intelligence, not raw data, giving customers foresight instead of hindsight.

In this blog, we explain how this dashboard can be designed and implemented using the underlying tools and analytical techniques that make predictive ESS observability possible.

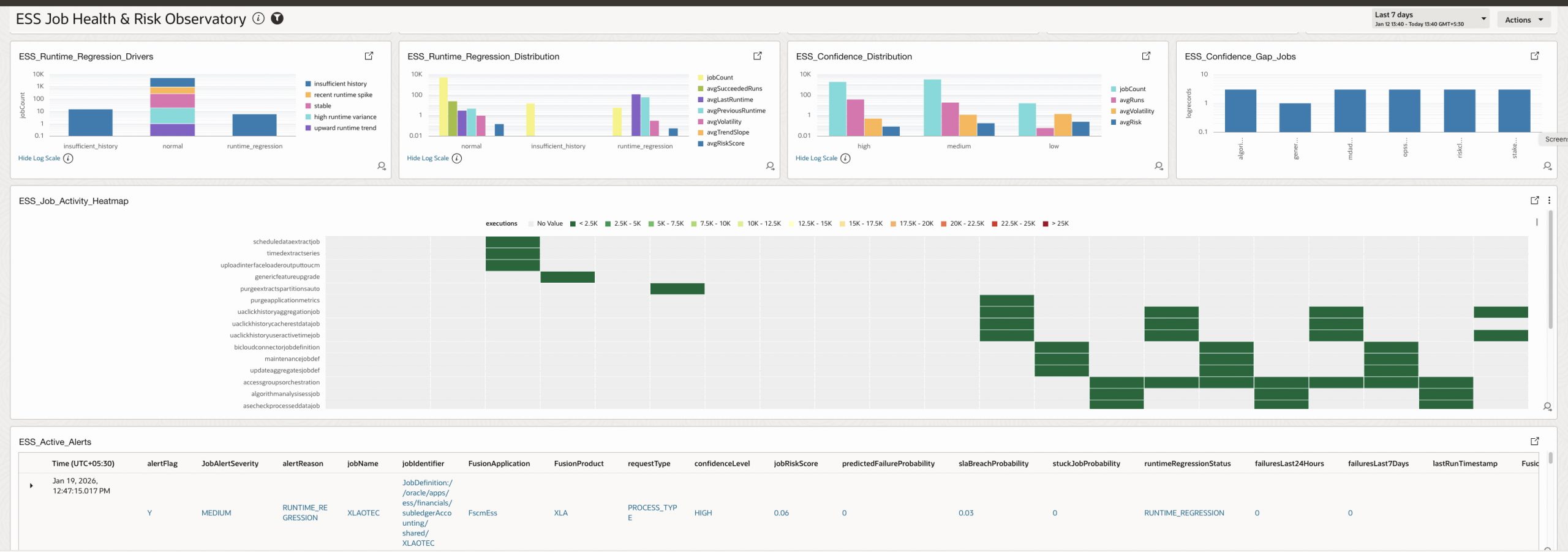

ESS Job Health & Risk Observatory

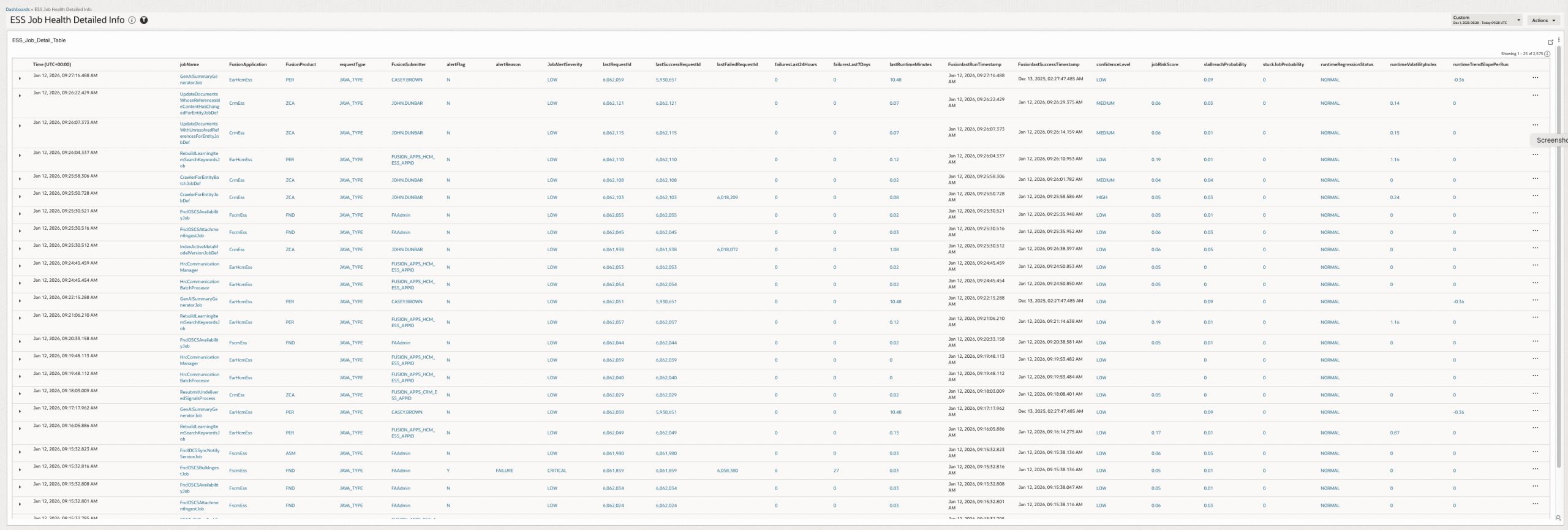

ESS Job Health Detailed Info (Drill Down Report)

Key Metrics

Each metric answers a concrete operational question and maps directly to an action, this is explained in details in section 1.3 ESS Job Health Signal Calculation

| Metric | What It Represents | Why It Is Useful for Customers |

| Average Run time Minutes | Average runtime of successful executions within the monitoring window. | Short-term performance tracking Drift detection |

| Standard Deviation Run time Minutes | Standard deviation of job runtimes within the monitoring window. | It measures how much a job’s runtime varies from run to run. If a job always takes about the same time, this value is low If a job sometimes runs fast and sometimes very slow, this value is high It tells you runtime consistency, not speed. |

| Average Run time Baseline Minutes | Typical job runtime – Long-term historical average runtime. | Baseline for planning and benchmarking |

| P95 Run time Minutes | Expected worst-case runtime – Upper runtime bound for normal behavior. | SLA design, alert thresholds, capacity sizing |

| Runtime Trend Slope | Direction and strength of runtime change over time – Is the job getting slower over time? | • Detects gradual degradation not visible in single runs • Early warning before SLAs or failures occur • Essential for predictive observability |

| Runtime Volatility Index | Degree of runtime variability for a job – Is runtime becoming unpredictable? | • High volatility = unstable job behavior • Helps explain why SLAs are breached intermittently • Critical for confidence scoring and alert trustworthiness |

| Run Time Regression Status | Detects performance degradation between consecutive runs. | Early signal of data growth Detects degradation before SLA breach |

| Predicted Failure Probability | Likelihood that the next execution of an ESS job will fail | • Predicts risk before failure happens • Avoids reacting only after incidents • Helps prioritize proactive remediation for high-risk jobs even if last run succeeded |

| SLA Breach Probability | Probability that a job’s runtime exceeds its historical SLA baseline | How likely is this job to run longer than what is considered normal for it? • Identifies jobs that are silently drifting toward SLA breaches • Useful for capacity planning and SLA governance |

| Stuck Job Probability | Probability that a currently running job is “stuck” | • Highlights jobs that may never complete • Enables early termination or escalation • Prevents resource starvation and cascading delays |

| Composite Job Risk Score | Unified risk indicator combining multiple failure dimensions – Single number summarizing job health | • Single, explainable risk number for dashboards • Enables sorting, bucketing, and alert thresholds • Avoids alert fatigue by combining signals |

| Confidence Score Weighted | A numeric confidence score (0–1) used internally for: Threshold tuning, Alert gating, Observability & dashboards | Allows fine-grained analysis beyond LOW / MEDIUM / HIGH Enables future adaptive thresholds |

| Confidence Level (LOW / MEDIUM / HIGH) | Trustworthiness of computed metrics – How reliable the prediction is ? | • Prevents false alerts on sparse data • Allows alerts only when signals are reliable • Increases customer trust in observability outputs |

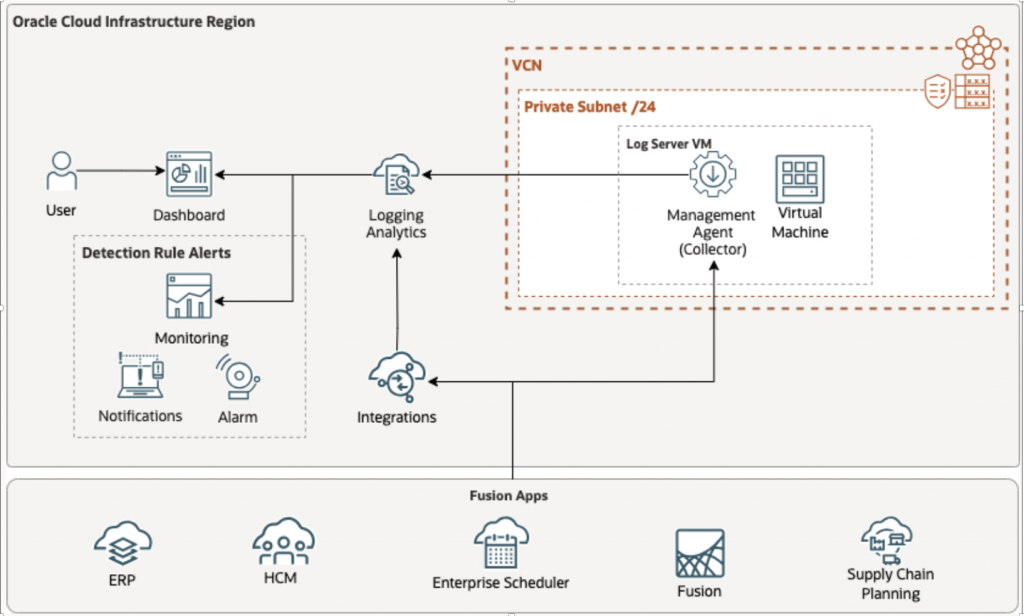

High Level Architecture

- BI Analytics report to compute, aggregate & extract ESS metrics data from Fusion tables

- Create Integration (OIC) to Expose BI metrics report as JSON

- Configure OCI Logging Analytics to ingest metrics from OIC Integration

- Design OCI Dashboard widgets & OCI Dashboard – ESS Job Health & Risk Observatory

- Define Detection Rules using alert flags and thresholds

- Configure OCI Alarms for proactive notifications

- Additionally – Analyze data using LoganAI

Data Flow

Fusion Applications → BI Publisher → OIC REST API → OCI Management Agent → OCI Log Analytics → Widgets/Dashboards → Detection Rules → Alarms !

Detailed Steps

1 – BI-Layer

The BI Analytics–driven approach is intentionally chosen because it enables historical baselining with statistical modeling.

This solution does not replace ESS APIs instead, it elevates ESS monitoring from reactive status checks to predictive job health intelligence.

BI is explicitly designed to bring aggregate Job data at controlled intervals and return only pre-computed summaries, ensuring negligible performance impact on Fusion therefore data volume remains low which brings –

- Time-series analytics

- Historical analysis

- Scheduled batch data access

- Derived metrics

Follow Quick Links to jump over topics

- Report columns

- Query parameters

- ESS Job Health signal calculations

- Alert engine

- Report design & development (Data model query & layout)

1.1- Report Columns

Column Legend – Computation Scope

- Windowed → Uses

:P_FROM_TIMESTAMP→:P_TO_TIMESTAMP - Cumulative (as of TO) → Uses data up to

:P_TO_TIMESTAMP - Derived → Parsed / inferred metadata

- Calculated → Computed using formulas, statistics, or rules

| Job Identity & Metadata | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| JOB_IDENTIFIER | Unique ESS job technical key | Primary identifier, joins | Derived | ESS_REQUEST_HISTORY.DEFINITION |

| JOB_NAME | Human-readable job name | UI display | Derived | Extracted from definition path |

| APPLICATION | Fusion application | App filtering | Derived | ESS metadata |

| PRODUCT | Fusion product | Product analytics | Derived | ESS metadata |

| REQUEST_TYPE | Job request type | Execution classification | Derived | ESS request type |

| PROCESSGROUP | ESS process group | Capacity analysis | Derived | ESS metadata |

| SUBMITTER | Job submitter | Audit & RCA | Derived | ESS metadata |

| SCHEDULED | Scheduled vs ad-hoc | Execution behavior | Derived | ESS flag |

| JOB_DEDUPE_KEY | Unique job-run key | Alert deduplication | Derived | Request ID || DEFINITION |

| Execution Timing & Request Details | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| LAST_RUN_TIMESTAMP | Latest execution start | Freshness checks | Windowed | Max PROCESSSTART |

| LAST_RUN_END_TIME | Latest execution end | Runtime calc | Windowed | PROCESSEND |

| REQUEST_START_TIME | Requested start time | Schedule adherence | Windowed | REQUESTEDSTART |

| REQUEST_EVENT_TIME | Analytics event time | Incremental ingestion | Windowed | Alias of last run time |

| REQUEST_COMPLETE_TIME | Completion timestamp | SLA checks | Windowed | COMPLETEDTIME |

| REQUESTID | Latest request ID | Drill-down | Windowed | Highest request ID |

| PARENTREQUESTID | Parent request | Job hierarchy | Derived | ESS metadata |

| Success & Runtime History | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| LAST_SUCCESS_TIMESTAMP | Most recent success | Reliability signal | Cumulative (as of TO) | Max success COMPLETEDTIME |

| LAST_RUNTIME_MINUTES | Last success runtime | Performance tracking | Cumulative | (END − START) × 1440 |

| PREVIOUS_RUNTIME_MINUTES | Prior success runtime | Regression detection | Cumulative | 2nd latest success |

| RUNTIME_REGRESSION_STATUS | Runtime degradation | Predictive alerts | Calculated | Compares last vs previous using regression factor |

| Baseline & Statistical Metrics | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| AVG_RUNTIME_BASELINE_MIN | Historical mean runtime | SLA baseline | Cumulative | AVG(success runtimes) |

| P95_RUNTIME_BASELINE_MIN | 95th percentile runtime | SLA threshold | Cumulative | PERCENTILE_CONT(0.95) |

| AVG_RUNTIME_MINUTES | Avg runtime (window) | Trend analysis | Windowed | AVG(success only) |

| STDDEV_RUNTIME_MINUTES | Runtime deviation | Stability analysis | Windowed | STDDEV(success only) |

| RUNTIME_VOLATILITY_INDEX | Runtime instability | Risk scoring | Calculated | STDDEV / AVG |

| RUNTIME_TREND_SLOPE_PER_RUN | Runtime trend | Capacity planning | Cumulative | Linear regression slope |

| Execution & Failure Metrics | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| TOTAL_RUNS_CUMULATIVE | Total executions | Job criticality | Windowed | COUNT(1) |

| FAILED_RUNS | Failures | Reliability | Windowed | STATE IN (10,15,18,19) |

| SUCCEEDED_RUNS | Success count | Confidence | Windowed | STATE IN (12, 17) |

| RUNNING_RUNS | Active runs | Load | Windowed | STATE = 3 |

| BLOCKED_RUNS | Blocked jobs | Dependency | Windowed | STATE = 5 |

| CANCELLED_RUNS | Cancelled | Operational | Windowed | STATE IN (7,9) |

| WARNING_RUNS | Warning | Confidence | Windowed | STATE = 11 |

| QUEUE_PRESSURE | Queue | Reliability | Windowed | STATE IN (1,2,6,13,14,20) |

| FAILURES_LAST_24_HOURS | 24h failures | Immediate alerting | Calculated | Rolling 24h window |

| FAILURES_LAST_7_DAYS | 7-day failures | Trend analysis | Calculated | Rolling 7-day window |

| ADHOC_COUNT | Adhoc Count | Load distribution | Windowed | Jobs executed by Adhoc Submissions |

| SCHEDULE_COUNT | Scheduled Count | Load distribution | Windowed | Jobs executed by Scheduled Submissions |

| Running & Stuck Job Indicators | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| CURRENTLY_RUNNING_COUNT | Active job count | Capacity | Cumulative | Count of active states |

| MAX_RUNNING_DURATION_HOURS | Longest runtime | Hung job detection | Calculated | Elapsed runtime |

| STUCK_JOB_PROBABILITY | Stuck likelihood | Critical alerts | Calculated | Compared vs P90 / P95 |

| Predictive & Risk Analytics | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| PREDICTED_FAILURE_PROBABILITY | Next-run failure risk | Proactive alerting | Calculated | Bayesian smoothing |

| SLA_BREACH_PROBABILITY | SLA violation chance | SLA alerts | Calculated | % runs > P95 |

| JOB_RISK_SCORE | Composite risk score | Alert prioritization | Calculated | Weighted multi-factor formula |

| CONFIDENCE_LEVEL | Signal reliability | Noise suppression | Calculated | Run count + volatility + trend |

| CONFIDENCE_SCORE_WEIGHTED | Numeric confidence | Threshold tuning | Calculated | Weighted scoring formula |

| Scheduling Intelligence | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| SCHEDULE_START_TIME | Schedule start | SLA alignment | Derived | Parsed from XML |

| SCHEDULE_END_TIME | Schedule end | Overdue detection | Derived | Parsed from XML |

| SCHEDULE_FREQUENCY | Frequency | Load analysis | Derived | Parsed from XML |

| SCHEDULE_INTERVAL | Interval | High-frequency detection | Derived | Parsed numeric |

| SCHEDULE_ENDED_FLAG | Schedule ended | Alert suppression | Calculated | ESS terminal state |

| SCHEDULE_OVERDUE_FLAG | Schedule overdue | Critical alert | Calculated | End < now & not ended |

| HIGH_FREQUENCY_SCHEDULE_FLAG | Very frequent jobs | Load/cost alerts | Calculated | MINUTELY / HOURLY ≤ 60 |

| Request Diagnostics | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| LAST_REQUEST_ID | Latest request | Drill-down | Cumulative | MAX(requestid) |

| LAST_SUCCESS_REQUEST_ID | Last success | RCA | Cumulative | MAX success |

| LAST_FAILED_REQUEST_ID | Last failure | RCA | Cumulative | MAX failure |

| LONGEST_RUNNING_REQUEST_ID | Longest active run | Hung job debug | Calculated | Max runtime |

| Alert Decision Outputs | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| ALERT_FLAG | Alert decision | OCI alarms | Calculated | Rule engine |

| ALERT_REASON | Root reason | Operator clarity | Calculated | Failure / SLA / Risk |

| ALERT_SEVERITY | Severity level | Escalation | Calculated | Risk-based severity |

| Meta Fields | ||||

| Column Name | Description | How It Is Used | Computation Scope | Calculation / Derivation |

| META_FROM_TIMESTAMP | Query start | API traceability | Input | Parameter |

| META_TO_TIMESTAMP | Query end | API traceability | Input | Parameter |

| META_GENERATED_AT | Execution time | Audit | Calculated | SYSTIMESTAMP UTC |

| META_RECORD_COUNT | Record count | Pagination | Calculated | COUNT(*) OVER() |

1.2 – Query Parameters

The ESS Job Health & Risk Observatory uses a small set of tunable control parameters to balance sensitivity, accuracy, and operational noise.

These parameters do not change raw execution data , they influence how predictive intelligence and alerts are derived from it.

They can be adjusted to align with specific risk appetite, job criticality, and data maturity.

Below Parameters have default value but can be set as per specific needs, these parameters do not affect job execution, they do not increase ESS or Fusion load, they operate on aggregated, periodic snapshots ,this design ensures predictive intelligence without performance impact on Fusion applications.

| Parameter | Description | Default | Meaning / Impact | How Used in Query |

| :P_FROM_TIMESTAMP | Monitoring window start | Required | Defines time window lower bound | Filters windowed metrics |

| :P_TO_TIMESTAMP | Monitoring window end | Required | Snapshot time for metrics | Anchor for all calculations |

| :P_REGRESSION_FACTOR | Runtime regression sensitivity | 1.5 | Detects runtime degradation | Last vs previous runtime |

| :P_MIN_SUCCESS_RUNS | Minimum success runs | 3 | Confidence eligibility | Confidence calculation |

| :P_STUCK_JOB_PROBABILITY | Stuck alert threshold | 0.8 | Hung job detection | Alert rules |

| :P_JOB_RISK_SCORE | Risk alert threshold | 0.6 | High-risk jobs | Alert rules |

| :P_SLA_BREACH_PROBABILITY | SLA alert threshold | 0.4 | SLA risk tolerance | Alert rules |

P_REGRESSION_FACTOR

Purpose: Controls sensitivity to runtime regressions between consecutive successful runs.

What it means:Defines how much slower a job must run (compared to its previous successful run) before it is classified as a runtime regression.

Default behavior: Default value (e.g. 1.5) means the job must run 50% longer than its previous run to be flagged.

When to tune:

- Lower value (e.g. 1.2):

Detects smaller performance degradations earlier (more sensitive). - Higher value (e.g. 2.0):

Flags only severe regressions (less noise). - Recommended usage: Keep default unless jobs are highly latency-sensitive.

P_MIN_SUCCESS_RUNS

Purpose: Ensures statistical confidence before applying predictive logic.

What it means:Minimum number of successful executions required before, Runtime trend analysis, Volatility calculation, Confidence scoring calculations are considered reliable.

Default behavior: Jobs with fewer than this number are marked LOW confidence.

When to tune:

- Lower value:

Useful in new environments with limited history. - Higher value:

Improves accuracy in mature, high-volume systems. - Recommended usage: Do not reduce below 3 unless absolutely necessary.

P_STUCK_JOB_PROBABILITY

Purpose: Threshold for flagging a running job as potentially stuck.

What it means:Represents how far current runtime has exceeded historical norms (P95 baseline).

Default behavior:Alerts triggered only when probability crosses a high threshold (e.g. 0.8).

When to tune:

- Lower value:

Early warning for long-running jobs. - Higher value:

Alerts only when jobs are clearly abnormal. - Recommended usage: Tune lower for business-critical batch windows.

P_JOB_RISK_SCORE

Purpose: Global threshold for composite job risk.

What it means:Defines when a job’s combined risk (failure probability + SLA risk + volatility + trend + stuck risk) becomes actionable.

Default behavior: Typical alert threshold around 0.6.

When to tune:

- Lower value:

Aggressive alerting, proactive operations. - Higher value:

Focus only on high-impact risks. - Recommended usage:Adjust per environment maturity and alert fatigue tolerance.

P_SLA_BREACH_PROBABILITY

Purpose: Controls when predicted SLA violations generate alerts.

What it means:Probability that a job’s runtime will exceed its historical SLA baseline (P95).

Default behavior:Alerts triggered when probability crosses a moderate threshold (e.g. 0.4).

When to tune:

- Lower value:

SLA-driven environments with strict deadlines. - Higher value:

Non-critical or flexible batch workloads. - Recommended usage:Align with business SLA definitions rather than technical limits.

1.3 – ESS Job Health Signal Calculations

This section explains how each analytical signal is derived, why it exists, and how it contributes to job health and alerting decisions.

1.3.1 – AVG_RUNTIME_MINUTES

Average runtime of successful executions within the monitoring window.

How It Is Calculated

AVG(runtime_minutes) WHERE state = SUCCESS AND PROCESSSTART between FROM and TO

Usage

- Short-term performance tracking

- Drift detection

1.3.2 – STDDEV_RUNTIME_MINUTES

Runtime variability within the window.

How It Is Calculated

STDDEV(runtime_minutes) WHERE state = SUCCESS

Usage

- Stability analysis

- Volatility detection

1.3.3 – AVG_RUNTIME_BASELINE_MIN

Long-term historical average runtime.

How It Is Calculated

AVG(runtime_minutes) WHERE state = SUCCESS AND PROCESSSTART <= TO_TIMESTAMP

Usage

- SLA baseline

- Performance expectations

1.3.4 – P95_RUNTIME_BASELINE_MIN

Upper runtime bound for normal behavior.

How It Is Calculated PERCENTILE_CONT(0.95) WITHIN GROUP (ORDER BY runtime_minutes)

Usage

- SLA thresholds

- Stuck job detection

- SLA breach probability

1.3.5 – RUNTIME_TREND_SLOPE_PER_RUN

Indicates whether job runtime is:

• Increasing

• Decreasing

• Stable

How It Is Calculated REGR_SLOPE(runtime_minutes, run_sequence)

Interpretation

| Slope | Meaning |

| ≈ 0 | Stable |

| > 0 | Runtime increasing |

| < 0 | Runtime improving |

Usage

- Detects slow degradation

- Enables capacity planning

DERIVED STABILITY & BEHAVIOR SIGNALS

1.3.6 – RUNTIME_VOLATILITY_INDEX

How unstable job runtime is over time.

How It Is Calculated STDDEV_RUNTIME / AVG_RUNTIME

• Normalized

• Capped at 1.0 in downstream usage

Interpretation

| Value | Meaning |

| < 0.2 | Very stable |

| 0.2 – 0.5 | Moderate variance |

| > 0.5 | Highly unstable |

1.3.7 – RUNTIME_REGRESSION_STATUS

Detects performance degradation between consecutive runs.

How It Is Calculated

IF previous_runtime IS NULL

→ INSUFFICIENT_HISTORY

ELSE IF

last_runtime > previous_runtime × regression_factor

→ RUNTIME_REGRESSION

ELSE → NORMAL

(Default regression factor = 1.5)

Usage

- Early signal of data growth

Detects degradation before SLA breach

PROBABILITY SIGNALS

1.3.8 – PREDICTED_FAILURE_PROBABILITY

Likelihood that the next execution will fail.

How It Is Calculated failed_runs / total_runs

1.3.9 – SLA_BREACH_PROBABILITY

Represents the likelihood that future executions of an ESS job will exceed its historical SLA threshold

SLA_BREACH_PROBABILITY Meaning

| Value | Meaning |

| < 0.1 | Healthy |

| 0.1 – 0.3 | Watch |

| 0.3 – 0.5 | High risk |

| > 0.5 | Very likely to breach |

How It Is Calculated

IF runtime_minutes > P95_RUNTIME_BASELINE_MIN → SLA breach

SLA_BREACH_PROBABILITY = (Number of successful runs exceeding P95) ÷(Total successful runs)

Usage

- Identifies jobs trending toward SLA failure

- Enables proactive scaling or scheduling changes

1.3.10 – STUCK_JOB_PROBABILITY

Probability that a currently running job is hung.

How It Is Calculated

Compares current runtime against historical percentiles:

| Condition | Probability |

| Runtime > P95 | 0.9 |

| Runtime > P90 | 0.6 |

| Else | 0.1 |

Usage

- Percentile-based (job-specific)

- No hard-coded SLA assumptions

1.3.11 – JOB_RISK_SCORE

A composite health risk score representing the probability that a job will:

• Fail

• Miss SLA

• Become unstable

• Get stuck

Components & Weights

| Signal | Weight |

| Failure probability | 35% |

| SLA breach probability | 25% |

| Runtime volatility | 15% |

| Runtime trend magnitude | 15% |

| Stuck job probability | 10% |

Formula (Conceptual)

JOB_RISK_SCORE = 0.35 × failure_probability+ 0.25 × sla_breach_probability+ 0.15 × volatility_index+0.15 × abs(runtime_trend_slope)+ 0.10 × stuck_probability

Usage

- Single, explainable health number

- Easy alert prioritization

- Works across job types and frequencies

CONFIDENCE SIGNAL

1.3.12 – CONFIDENCE_SCORE_WEIGHTED

A numeric confidence score (0–1) used internally for:

• Threshold tuning

• Alert gating

• Observability & dashboards

How It Is Calculated LEAST(total_runs / 10, 1) * 0.5 + (1 – LEAST(stddev_runtime / avg_runtime, 1)) * 0.3 + CASE WHEN trend_exists THEN 0.2 ELSE 0 END

1.3.13 – CONFIDENCE_LEVEL

Indicates how reliable the computed health signals are for a job.

This prevents false positives for:

• New jobs

• Infrequently run jobs

• Highly unstable runtime patterns

Confidence Components & Weights

| Component | Weight | Meaning |

| Execution history depth | 50% | More runs = higher confidence |

| Runtime stability | 30% | Lower variance = higher confidence |

| Trend availability | 20% | Trend present = higher confidence |

Score Range Mapping

| Score Range | CONFIDENCE_LEVEL |

| ≥ 0.75 | HIGH |

| 0.40 – 0.74 | MEDIUM |

| < 0.40 | LOW |

Why This Works

- Ensures predictions are based on enough data

- Automatically adapts as job history grows

- Eliminates alert noise for new jobs

1.4 – Alert Engine – Decision Logic & Design

The ESS Job Alert Engine evaluates each job’s execution health using a rule-based decision framework enriched with predictive analytics.

The engine combines:

- Hard operational signals (failures, stuck jobs, overdue schedules)

- Soft predictive signals (runtime regression, risk score, SLA breach probability)

- Confidence gating to avoid false positives

The output is a single actionable signal:

- ALERT_FLAG = ‘Y’ → Attention required

- ALERT_FLAG = ‘N’ → Job considered healthy

Alert Engine Design Principles

- Hard signals always win

- If a job is failing or stuck, alert immediately

- Predictive alerts require confidence

- Noisy signals are suppressed when historical data is weak

- Single primary alert reason

- Operators should see why an alert fired

- Severity reflects operational impact

- Not all alerts deserve the same urgency

1.4.1 – ALERT_FLAG – How the Alert Is Triggered

ALERT_FLAG is set to 'Y' if ANY of the following conditions are met.

A. Hard Signals (Immediate Alert)

These signals indicate current operational impact and do not require confidence checks.

A.1 Recent Failures

FAILURES_LAST_24_HOURS>0

- Job has failed at least once in the last 24 hours

Alert Behavior Severity = CRITICAL

A.2 Stuck Job Detection

STUCK_JOB_PROBABILITY>=:P_STUCK_JOB_PROBABILITY

(Default threshold = 0.8)

Meaning

- Running job has exceeded historical P95 runtime

- estimates the likelihood that a currently running job is abnormally over-running relative to its historical behavior, indicating a real-time execution risk that may require operational intervention

- For a job that is currently running, how likely is it that it will never complete normally – real-time, forward-looking, and state-aware.

- Strong indicator of hung threads, deadlocks, or data issues

Alert Behavior Severity = CRITICAL

A.3 Overdue Schedule

SCHEDULE_OVERDUE_FLAG='Y'

Meaning

- Scheduled job did not complete before its defined end time

- SLA breach or missed business cutoff

Alert Behavior Severity = HIGH

B. Predictive / Soft Signals (Confidence-Gated)

These signals predict future or latent risk and require sufficient historical confidence.

B.1 Confidence Gate

CONFIDENCE_LEVELIN('MEDIUM','HIGH')

Meaning

- Prevents alerting on new or unstable jobs

- Reduces false positives

B.2 Runtime Regression

RUNTIME_REGRESSION_STATUS='RUNTIME_REGRESSION'

Meaning

- Last runtime exceeds previous runtime by a regression factor

- Early sign of data growth or performance degradation

Alert Behavior Alert only if confidence is MEDIUM or HIGH

B3. High Composite Risk Score

JOB_RISK_SCORE>=:P_JOB_RISK_SCORE

(Default threshold = 0.6)

Meaning

- Combined risk across failures, SLA, volatility, trends, and stuck probability

- Represents overall job health deterioration

B3. SLA Breach Probability

SLA_BREACH_PROBABILITY>=:P_SLA_BREACH_PROBABILITY

(Default threshold = 0.4)

- Significant likelihood that future runs will exceed SLA

- How often a job historically violates its expected runtime SLA, providing a stable indicator of long-term performance risk.

- Proactive alert before SLA violations occur

- How likely is this job to run longer than what is considered normal for it?

- Based on past successful executions, how often does this job exceed its expected SLA?

ALERT_FLAG Logic

ALERT_FLAG = ‘Y’

IF

FAILURES_LAST_24_HOURS > 0

OR STUCK_JOB_PROBABILITY >= threshold

OR SCHEDULE_OVERDUE_FLAG = ‘Y’

OR ( CONFIDENCE_LEVEL IN (‘MEDIUM’,’HIGH’)

AND (RUNTIME_REGRESSION_STATUS = ‘RUNTIME_REGRESSION’

OR JOB_RISK_SCORE >= threshold

OR SLA_BREACH_PROBABILITY >= threshold

)

) ELSE ‘N’

1.4.2 – ALERT_REASON

How the Primary Reason Is Selected, Only one reason is emitted to keep alerts actionable, The most operationally urgent reason is always selected first.

Precedence Order (Top Wins)

- FAILURE

- STUCK

- SCHEDULE_OVERDUE

- RUNTIME_REGRESSION

- HIGH_RISK_SCORE

- SLA_RISK

CASE

WHEN FAILURES_LAST_24_HOURS > 0 THEN ‘FAILURE‘

WHEN STUCK_JOB_PROBABILITY >= threshold THEN ‘STUCK‘

WHEN SCHEDULE_OVERDUE_FLAG = ‘Y’ THEN ‘SCHEDULE_OVERDUE‘

WHEN RUNTIME_REGRESSION_STATUS = ‘RUNTIME_REGRESSION‘ THEN ‘RUNTIME_REGRESSION’

WHEN JOB_RISK_SCORE >= threshold THEN ‘HIGH_RISK_SCORE‘

WHEN SLA_BREACH_PROBABILITY >= threshold THEN ‘SLA_RISK‘ END

1.4.3 – ALERT_SEVERITY

How Severity Is Determined – Severity reflects business impact and urgency.

| Condition | Severity |

| Recent failures | CRITICAL |

| Stuck job | CRITICAL |

| Schedule overdue | HIGH |

| Risk score ≥ 0.75 | HIGH |

| Risk score ≥ 0.60 | MEDIUM |

| Predictive signals only | MEDIUM |

| No alert | LOW |

CASE

WHEN FAILURES_LAST_24_HOURS > 0 THEN ‘CRITICAL‘

WHEN STUCK_JOB_PROBABILITY >= threshold THEN ‘CRITICAL‘

WHEN SCHEDULE_OVERDUE_FLAG = ‘Y’ THEN ‘HIGH‘

WHEN JOB_RISK_SCORE >= 0.75 THEN ‘HIGH‘

WHEN JOB_RISK_SCORE >= 0.6 THEN ‘MEDIUM‘

ELSE ‘LOW’ END

1.5 – Report Design

These signals intentionally combine short-term windowed behavior with long-term historical baselines, ensuring that alerts are both timely and statistically grounded.

1.5.1 – Create BI Report

- Create Data Model using BI Report Query

- Refer – Creating the Data Model for the BI Publisher Report Extract

https://docs.oracle.com/en/cloud/saas/enterprise-performance-management-common/diepm/integrations_erp_asyn_datamodel.html - Refer – BI Data Model Query

- Refer – Creating the Data Model for the BI Publisher Report Extract

- Create Report Using Data Model

- Refer – Creating the BI Publisher Report Extract on high level steps.

https://docs.oracle.com/en/cloud/saas/enterprise-performance-management-common/diepm/integrations_erp_async_publisher.html , Use XSL Template For Report Layout, so reports generate XML data.

- Refer – Creating the BI Publisher Report Extract on high level steps.

2 – OIC Layer

- Expose BI report via REST facade

- Normalize output

- Enforce security

2.1 – Prerequisites

Fusion BIP Report

- Data model uses SQL query

- Report returns XML output

- Report is tested in Fusion UI

OIC Connectivity

- SOAP Adapter configured using OAuth2.0 mechanism for Fusion BIP Webservice

- OAuth2.0 Client Confidential App to access OIC End points securely.

We have used OAuth2.0 as better Security posture, however same can be done via basic authentication.

Schemas Available

- XSD for:

- BIP SOAP response

- Stage File read operation

- JSON request/response

- Request parameters

- Response payload.

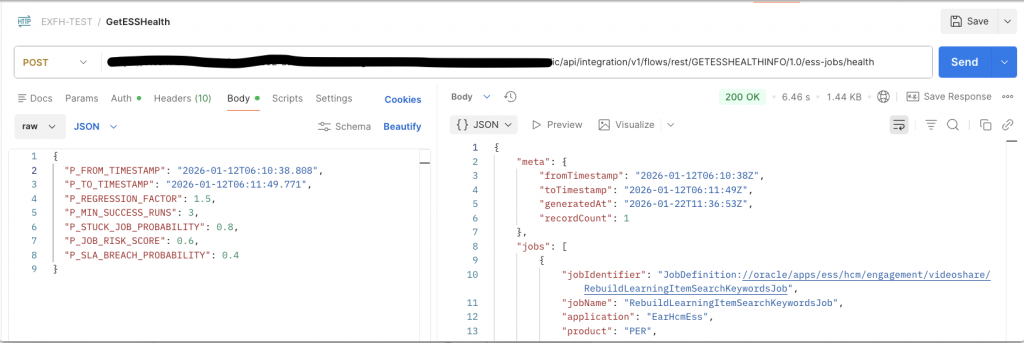

Step 2.2 : Create App Driven Integration

- Navigate to Integrations → Insight → Integrations

- Click Create → App Driven Orchestration

- Provide:

- Integration Name

- Identifier

- Package

- Enable REST Adapter as trigger

This REST endpoint becomes the public API for your BIP report, please keep note of this end point as this will be used as Log End Point URL when creating Source in Log Analytics.

Step 2.3 : Configure REST Trigger (GetESSHealthInfo)

Define REST Contract

- HTTP Method: POST

- Media Type: application/json

- Request parameters:

- Response schema:

Step 2.4: Map REST Request to BIP SOAP Request

- Add Map action after trigger

- Source: REST request

- Target: BIP runReport SOAP request

Mapping includes:

- Report Path

- Report Name

- Report Parameters

- Output Format = xml

- P_FROM_TIMESTAMP

- P_TO_TIMESTAMP

- P_REGRESSION_FACTOR

- P_MIN_SUCCESS_RUNS

- P_STUCK_JOB_PROBABILITY

- P_JOB_RISK_SCORE

- P_SLA_BREACH_PROBABILITY

Step 2.5: Invoke BIP SOAP Service

- Add Invoke action

- Use Fusion Applications Adapter defined in prerequisites

- Select BI Publisher Service

- Operation: runReport

SOAP response is returned with:

- reportBytes (Base64 encoded XML)

Step 2.6: Write SOAP XML to Stage File (Opaque Handling)

- Add Map → Stage File (Write)

- Provide:

- File name (dynamic or static)

- Directory

- Map:

- Base64 decoded reportBytes → file content

- Use Opaque Schema XSD, required because

- SOAP payload cannot be directly mapped

- OIC treats it as unstructured XML

- Refer – https://docs.oracle.com/en/cloud/paas/application-integration/integrations-user/use-stage-file-write-operation-opaque-schema.html

Step 2.7: Read Stage File using Structured XSD

- Add Stage File → Read File

- Associate Custom XSD that matches BIP XML structure

- OIC interprets XML as structured data

Step 2.8: Map BIP XML to REST Response

- Add Map action

- Source:

- Stage file read output (XSD-based)

- Target:

- REST response XSD

Step 2.9: Return REST Response

- Final map sends transformed payload back

- REST client receives:

- Clean JSON

Step 2.10: Activate Integration & Test API

- Set Tracking Variables

- Save & Activate Integration

- Integration End Point URL

- Test API in OIC & Postman

3 – OCI Logging Analytics

3.1 – Prerequisites

- OCI tenancy with Log Analytics enabled

- Following IAM Policies are required to enable Log to analytics & allow for Log Collection, you need to setup these policies accordingly to your OCI governance requirements.

- Enable Access to Log Analytics and Its Resources

- Allow Continuous Log Collection Using Management Agents

Process flow – Management Agent ↓ Calls OIC REST API (POST) ↓ OAuth token fetched using Source Credential ↓ JSON parsed by Fusion_ESS_Job_Health_JSON_Parser ↓ Records stored in Log Group ↓ Entity = Fusion_ESS_Job_Health -> Dashboards / Alerts

Follow Quick Links to jump over topics

- Create Management Agent

- Update Management Agent

- Create Log Group

- Create Log Parser

- Create Log Source

- Create Credential File

- Create Entity

- Associate Source to Entity

- Verify

STEP 3.1 – Create Management Agent

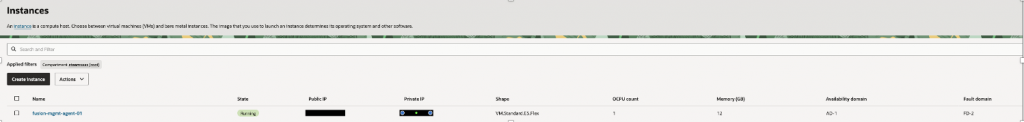

We need to provision compute VM Instance on which Management Agent runs, this Management Agent will eventually poll REST Endpoints for continuous log collection.

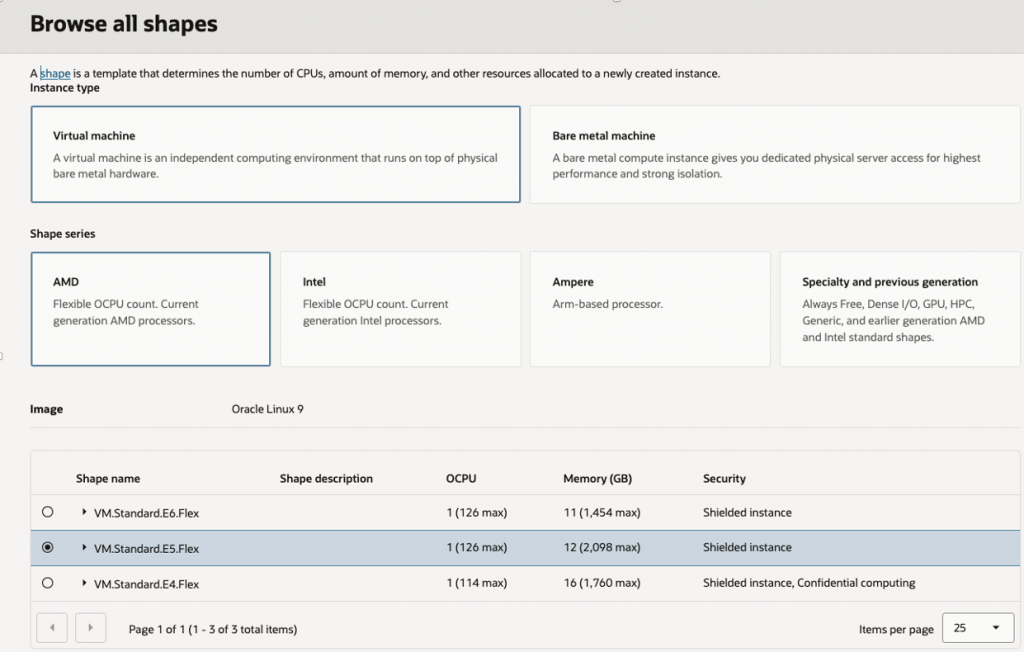

VM Creation

- Name – fusion-mgmt-agent-01 (You can keep name as per your conventions)

- Shape: VM.Standard.E4.Flex

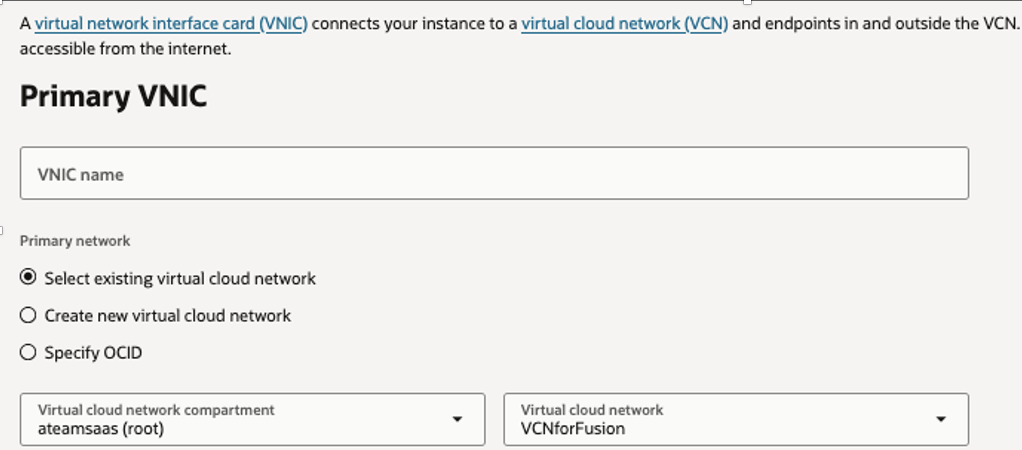

- OS: Oracle Linux 8

- Network:

- Private subnet preferred

- Internet access or NAT required

- Open outbound HTTPS (443)

- Check for Addition of Management Agent.

Follow below Guidance for configuration of VM

- Refer – Generic Prerequisites for Deploying Management Agents >> Operating System Requirements

https://docs.oracle.com/en-us/iaas/management-agents/doc/perform-prerequisites-deploying-management-agents.html - Start with basic & increase as per your workloads, the incremental compute cost is modest .

Refer – https://www.oracle.com/cloud/compute/virtual-machines/pricing/

Compute

- VM.Standard.E5.Flex

- 1–2 OCPUs

- 12–16 GB RAM

- Oracle Linux 9

- Add – Management Agent , Optionally OS Management Hub Agent

Select Management Agent in Oracle Cloud Agent , optionally add – OS Management Hub Agent to Automate Management and monitor updates and patches for the operating system environment on the instance.

In case you choose – OS Management Hub Agent, then you need to choose Profile – Profile which matches with OS details, if it not there you can create new profile

Network – Select VCN which allows Ingress Access to Port 22 for compute SSH access

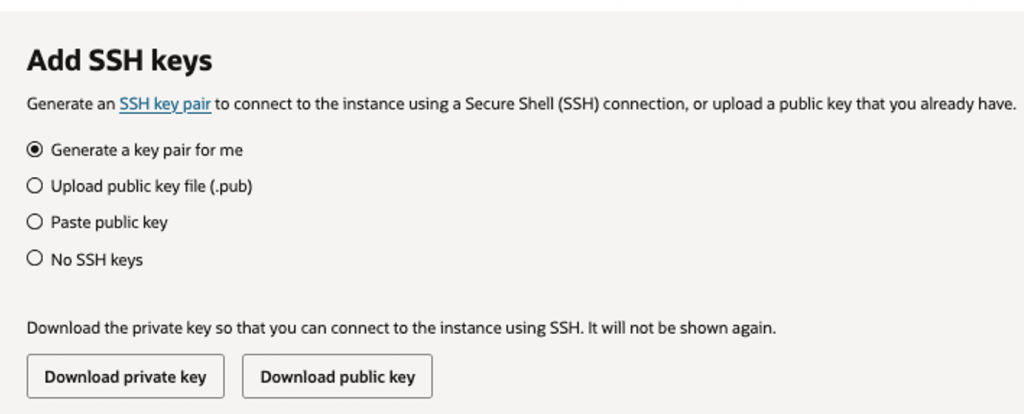

Download Key Pairs so you can use that to connect to compute.

Finally Press – Create Button to submit provision request & wait for instance to be in active state.

Keep Note of Management Agent Name & Public IP, in our case it is – fusion-mgmt-agent-01, this will be used later while creating Entity in next steps.

STEP 3.2 – Update Management Agent

Update management Agent to Enable REST API Collection method.

Open Terminal & SSH to Compute

ssh -i /path/to/your_private_key.pem opc@PUBLIC_IP_OF_COMPUTEValidate Agent is Running, below command should return Active.

systemctl is-active oracle-cloud-agentStarts an interactive Bash shell as the user oracle-cloud-agent

sudo -u oracle-cloud-agent /bin/bashAccess Agent configuration File

cd /var/lib/oracle-cloud-agent/plugins/oci-managementagent/polaris/agent_inst/configEdit Agent Configuration File to Enable REST API Collection.

vi emd.properties Go to Enter Mode in Vi Editor & Paste below lines in End to allows REST API–based log collection

loganalytics.rest_api.enable_oci_api=true

loganalytics.rest_api.report_interval=600Verify If lines are added

grep loganalytics.rest_api emd.propertiesRestart the Management Agent so the change takes effect

sudo systemctl restart oracle-cloud-agent Validate if Agent is Active and running

sudo systemctl status oracle-cloud-agentSTEP 3.3 – Create Log Group

For Logical isolation for ESS monitoring data

OCI Console → Log Analytics

Log Groups → Create

Name: Fusion_ESS_Job_Health_Log_Group

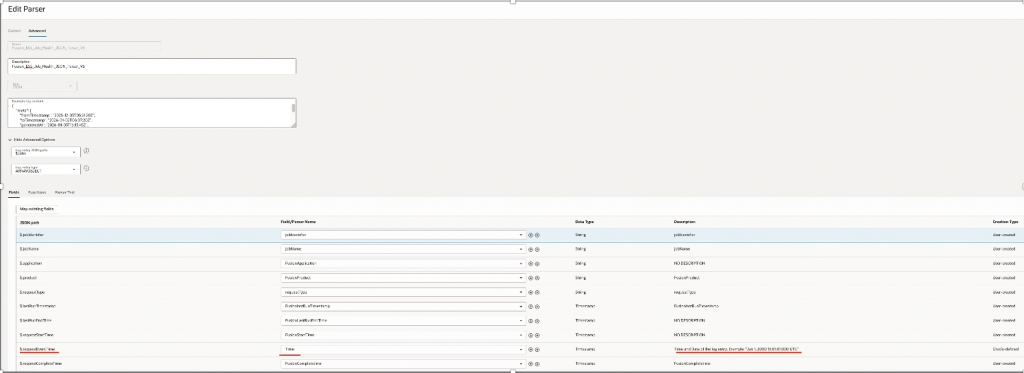

STEP 3.4 – Create Log Parser

Parses ESS Job Health REST API response from OIC

Log Analytics → Administration → Parsers → Create Parser– JSON Parser

| Field | Value |

| Name | Fusion_ESS_Job_Health_JSON_Parser_V5 |

| Type | JSON |

| Example Log Content | Sample JSON Rest Response |

| Log Entry JSON Path | $Jobs |

| Log Entry Type | ARRAYOBJECT |

Sample JSON Rest Response Structure –

REST API response

├── meta ignored by parser

└── jobs[] each element = 1 log entry

├── runtime

├── executionStats

├── alert

└── scheduleNote – Map Attribute “requestEventTime” to Log Analytics Standard Time, this column would be used by log analytics to collect data in incremental way using Time Intervals.

Refer Mapping as below –

| JSON Path | Log Analytics Field | Data Type | Creation Type |

| $.jobIdentifier | jobIdentifier | STRING | User-created |

| $.jobName | jobName | STRING | User-created |

| $.application | FusionApplication | STRING | User-created |

| $.product | FusionProduct | STRING | User-created |

| $.requestType | requestType | STRING | User-created |

| $.requestEventTime | Time | TIMESTAMP | Oracle-defined |

| $.requestStartTime | FusionStartTime | TIMESTAMP | User-created |

| $.requestCompleteTime | FusionCompletetime | TIMESTAMP | User-created |

| $.lastRunTimestamp | FusionlastRunTimestamp | TIMESTAMP | User-created |

| $.lastRunEndTime | FusionLastRunEndTime | TIMESTAMP | User-created |

| $.lastSuccessTimestamp | FusionlastSuccessTimestamp | TIMESTAMP | User-created |

| $.runtime.lastRuntimeMinutes | lastRuntimeMinutes | DOUBLE | User-created |

| $.runtime.previousRuntimeMinutes | previousRuntimeMinutes | DOUBLE | User-created |

| $.runtime.avgRuntimeBaselineMinutes | avgRuntimeBaselineMinutes | DOUBLE | User-created |

| $.runtime.p95RuntimeBaselineMinutes | p95RuntimeBaselineMinutes | DOUBLE | User-created |

| $.runtime.runtimeVolatilityIndex | runtimeVolatilityIndex | DOUBLE | User-created |

| $.runtime.runtimeTrendSlopePerRun | runtimeTrendSlopePerRun | DOUBLE | User-created |

| $.runtime.runtimeRegressionStatus | runtimeRegressionStatus | STRING | User-created |

| $.executionStats.totalRuns | totalRuns | LONG | User-created |

| $.executionStats.succeededRuns | succeededRuns | LONG | User-created |

| $.executionStats.failedRuns | failedRuns | LONG | User-created |

| $.executionStats.runningRuns | runningRuns | LONG | User-created |

| $.executionStats.blockedRuns | blockedRuns | LONG | User-created |

| $.executionStats.cancelledRuns | cancelledRuns | LONG | User-created |

| $.executionStats.warningRuns | warningRuns | LONG | User-created |

| $.executionStats.adhocCount | adhocCount | LONG | User-created |

| $.executionStats.scheduledCount | scheduledCount | LONG | User-created |

| $.executionStats.queuePressure | queuePressure | LONG | User-created |

| $.executionStats.avgRuntimeMinutes | avgRuntimeMinutes | DOUBLE | User-created |

| $.executionStats.stddevRuntimeMinutes | stddevRuntimeMinutes | DOUBLE | User-created |

| $.failureMetrics.failuresLast24Hours | failuresLast24Hours | LONG | User-created |

| $.failureMetrics.failuresLast7Days | failuresLast7Days | LONG | User-created |

| $.failureMetrics.predictedFailureProbability | predictedFailureProbability | DOUBLE | User-created |

| $.slaMetrics.slaBreachProbability | slaBreachProbability | DOUBLE | User-created |

| $.runningState.currentlyRunningCount | currentlyRunningCount | LONG | User-created |

| $.runningState.maxRunningDurationHours | maxRunningDurationHours | DOUBLE | User-created |

| $.runningState.stuckJobProbability | stuckJobProbability | DOUBLE | User-created |

| $.runningState.longestRunningRequestId | longestRunningRequestId | STRING | User-created |

| $.confidence.confidenceLevel | confidenceLevel | STRING | User-created |

| $.confidence.confidenceScoreWeighted | confidenceScoreWeighted | DOUBLE | User-created |

| $.risk.jobRiskScore | jobRiskScore | DOUBLE | User-created |

| $.schedule.scheduleStartTime | scheduleStartTime | TIMESTAMP | User-created |

| $.schedule.scheduleEndTime | scheduleEndTime | TIMESTAMP | User-created |

| $.schedule.scheduleFrequency | scheduleFrequency | STRING | User-created |

| $.schedule.scheduleInterval | scheduleInterval | LONG | User-created |

| $.schedule.scheduleEndedFlag | scheduleEndedFlag | STRING | User-created |

| $.schedule.scheduleOverdueFlag | scheduleOverdueFlag | STRING | User-created |

| $.schedule.highFrequencyScheduleFlag | highFrequencyScheduleFlag | STRING | User-created |

| $.requests.lastRequestId | lastRequestId | LONG | User-created |

| $.requests.lastSuccessRequestId | lastSuccessRequestId | LONG | User-created |

| $.requests.lastFailedRequestId | lastFailedRequestId | LONG | User-created |

| $.requests.RequestId | FusionRequestID | LONG | User-created |

| $.requests.parentRequestId | FusionParentRequestID | LONG | User-created |

| $.requests.processGroup | FusionProcessGroup | STRING | User-created |

| $.requests.submitter | FusionSubmitter | STRING | User-created |

| $.alert.alertFlag | alertFlag | STRING | User-created |

| $.alert.alertSeverity | JobAlertSeverity | STRING | User-created |

| $.alert.alertReason | alertReason | STRING | User-created |

| $.additionalInfo.attributeName1 | attributeName1 | STRING | User-created |

| $.additionalInfo.attributeVal1 | attributeVal1 | STRING | User-created |

| $.additionalInfo.attributeName2 | attributeName2 | STRING | User-created |

| $.additionalInfo.attributeVal2 | attributeVal2 | STRING | User-created |

| $.additionalInfo.attributeName3 | attributeName3 | STRING | User-created |

| $.additionalInfo.attributeVal3 | attributeVal3 | STRING | User-created |

| $.additionalInfo.attributeName4 | attributeName4 | STRING | User-created |

| $.additionalInfo.attributeVal4 | attributeVal4 | STRING | User-created |

| $.additionalInfo.attributeName5 | attributeName5 | STRING | User-created |

| $.additionalInfo.attributeVal5 | attributeVal5 | STRING | User-created |

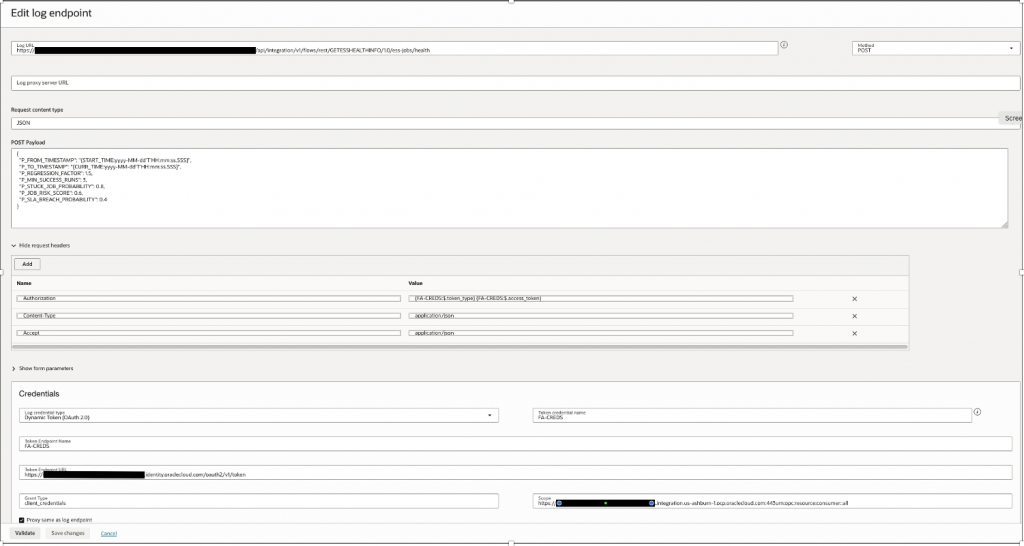

STEP 3.5 – Create Log Source

We will Create Logging Analytics REST Source.

Refer – How to set Credentials, Time Parameters as bind variable, refer –

https://docs.oracle.com/en-us/iaas/log-analytics/doc/rest-api-log-collection.html

Log Analytics → Administration → Sources → Create Source

| Field | Value |

| Name | Fusion_ESS_Job_Health_Source_V30 |

| Source Type | REST API |

| Entity Types | OIC Integration |

| Parser | Fusion_ESS_Job_Health_JSON_Parser_V5 |

Log End Point

Log URL – OIC API End point, refer Step 2.2 : Create App Driven Integration

For Example – https://<OICURL>/ic/api/integration/v1/flows/rest/GETESSHEALTHINFO/1.0/ess-jobs/health

Credentials

| Field | Value |

| Log Credentials Type | Dynamic Token(OAuth2.0) |

| Token End Point Name | FA-CREDS |

| Token End Point URL | https://<idcsURL>/oauth2/v1/token |

| Grant Type | client_credentials |

| Scope | https://<OICURL>:urn:opc:resource:consumer::all |

Method – POST

Request Payload –

Here in P_FROM_TIMESTAMP & P_TO_TIMESTAMP, we are passing Log analytics standard Macros – START_TIME & CURR_TIME with precision of millisecond in date format, as many ESS Jobs would complete within same second however may exceed in milliseconds.

Therefore for accuracy & to account for any overlaps – we don’t want same Jobs data to be collected in next runs, millisecond precision is important, please refer how START_TIME is computed, this is very important to understand how incremental data is collected over each collection runs with moving time window.

The default value of START_TIME is 30 days, that is, the value of the timestamp is 30 days earlier than the current timestamp.

For remaining parameters please refer 1.2 – Query Parameters Section, how they are set as per requirements.

{

"P_FROM_TIMESTAMP": "{START_TIME:yyyy-MM-dd'T'HH:mm:ss.SSS}",

"P_TO_TIMESTAMP": "{CURR_TIME:yyyy-MM-dd'T'HH:mm:ss.SSS}",

"P_REGRESSION_FACTOR": 1.5,

"P_MIN_SUCCESS_RUNS": 3,

"P_STUCK_JOB_PROBABILITY": 0.8,

"P_JOB_RISK_SCORE": 0.6,

"P_SLA_BREACH_PROBABILITY": 0.4

}

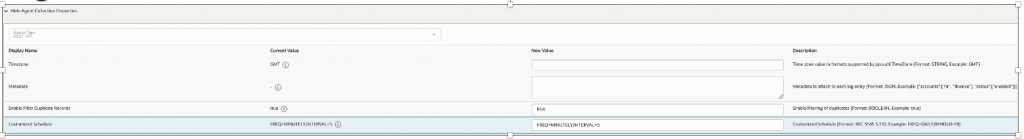

Agent Collection Properties

Set Duplicate as True – This will remove any duplicates collected over time.

Scheduler as 5 minutes or 10 Minutes per your requirements, should not exceed large time intervals for good near real time monitoring & low data volumne.

STEP 3.6 – Create Credential File for REST Access

This is additional step where OAuth2.0 Confidential App Client ID & Secret is saved as credentials inside management agent , as we don’t want them to be exposed over UI.

This is OAuth2.0 Client Confidential App configured to access OIC End points securely.

Refer https://docs.oracle.com/en/cloud/paas/application-integration/integrations-user/protect-integrations-rest-endpoint-oauth.html

Configure OAuth2.0 credential file on agent, Open Terminal & SSH to Compute

ssh -i /path/to/your_private_key.pem opc@PUBLIC_IP_OF_COMPUTECreate FA-CREDS.json

vi /tmp/FA-CREDS.jsonAdd below Information, change value of HTTPSUserName & HTTPSPassword for OAuth2.0 Client ID and Secret.

[

{

"source": "lacollector.la_rest_api",

"name": "FA-CREDS",

"type": "HTTPSBasicAuthCreds",

"description": "These are HTTPS (BasicAuth) credentials.",

"properties":

[

{

"name": "HTTPSUserName",

"value": "oauth2.0clientid"

},

{

"name": "HTTPSPassword",

"value": "oauth2.0clientsecret""

}

]

}

]Upload Credentials –

cat /tmp/FA-CREDS.json | sudo -u oracle-cloud-agent /var/lib/oracle-cloud-agent/plugins/oci-managementagent/polaris/agent_inst/bin/credential_mgmt.sh -o upsertCredentials -s loganIt should return with message – Credentials Uploaded.

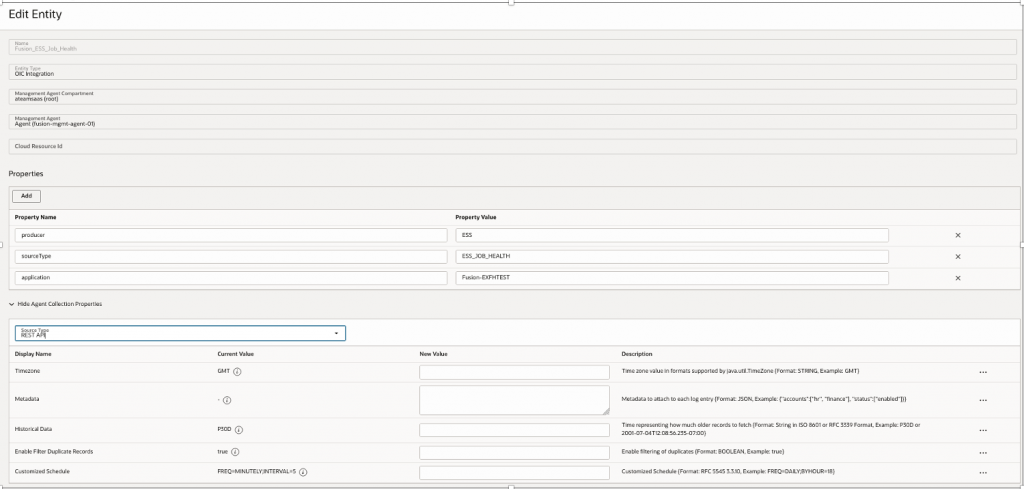

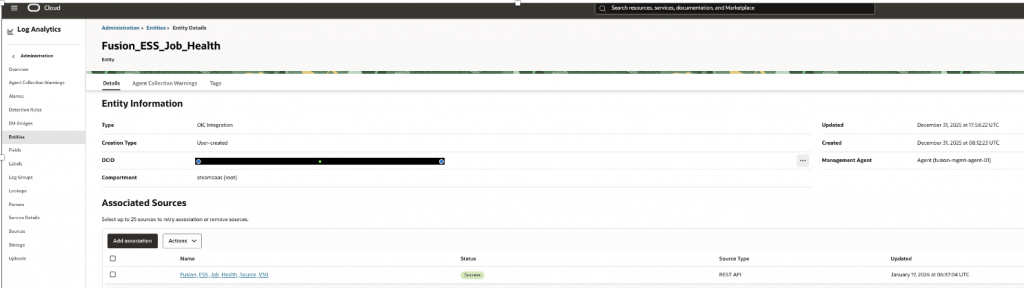

STEP 3.7 – Create Entity

Entity sits over Management Agent & Associate with Source to pull data

| Property Name | Property Value |

| Name | Fusion_ESS_Job_Health |

| Entity Tyoe | OIC Integration |

| Management Agent | Fusion-mgmt-agent-01 |

| Source Type | REST API |

| >>Add New Properties | |

| Producer | ESS |

| SourceType | ESS_JOB_HEALTH |

| application | FusionInstanceName |

STEP 3.8 – Associate Source to Entity

Go to Entity And Press Button – Add Association, to add Source to Entity.

STEP 3.9 – Verify if Entity has started polling REST API

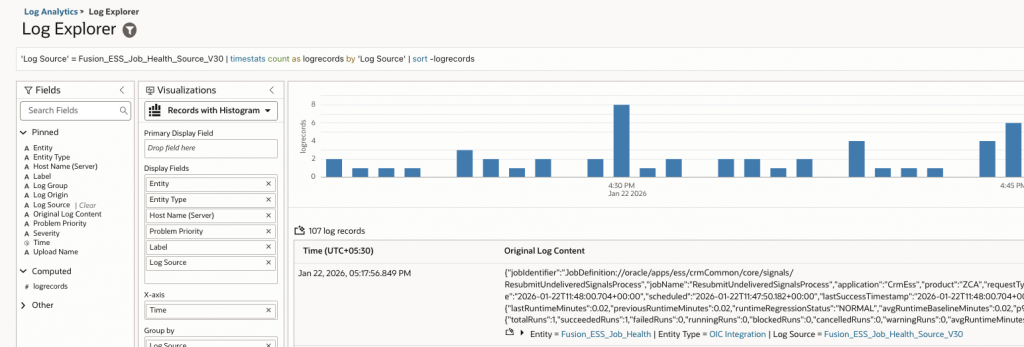

Go to Log Explorer & execute below search Search Query, data should start flowing in sometime, usually as per polling frequency (ie..5 Minutes in our case)

'Log Source' = Fusion_ESS_Job_Health_Source_V30 | timestats count as logrecords by 'Log Source' | sort -logrecords

If data doesn’t flows into then you might need to troubleshoot, if credentials are working or for any other failures, check for any Management Agent log collection warning on source or entity page.

https://docs.oracle.com/en-us/iaas/log-analytics/doc/view-agent-collection-warnings.html

4 – Create Widgets & OCI Dashboards

Once data start flowing in Log analytics , we can start building Widgets.

Below are widgets used for building OCI Dashboard – ESS Job Health & Risk Observatory that tracks for health, failures, runtime trends, risks & shows Unified operational, statistical, and predictive visibility into Fusion ESS jobs, combining windowed execution signals with long-term behavioral risk indicators.

Once these Widgets are created , they can be saved over OCI Dashboard.

OCI Logging Analytics dashboards support automatic refresh at configurable intervals.You can set the refresh interval (e.g., every 1 min, 5 min, 15 min) depending on your log ingestion frequency.

Build Widgets as Saved Query – Visualize Data Using Charts and Controls

https://docs.oracle.com/en-us/iaas/log-analytics/doc/visualize-data-using-charts-controls.html

Below widgets were developed to build ESS Job Health & Risk Observatory, you can use Log queries to build same and covert them into OCI Dashboard.

| Widget | Log Query | Visualizations | Descriptions | |

| 1 | ESS_TotalJobRuns | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats sum(totalRuns) as TotalJobRuns | Tile | Total Number or Jobs in Given Time |

| 2 | ESS_Accuracy_Rate | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats sum(succeededRuns) as success, sum(warningRuns) as warning, sum(totalRuns) as total | eval ESS_Accuracy_Rate = round((success * 1 + warning * 0.7) / total * 100, 2) | stats max(ESS_Accuracy_Rate) as ESS_Accuracy_Rate | Tile | How reliably and predictably are my ESS jobs executing? |

| 3 | ESS_FailuresRate | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats sum(failedRuns) as failed, sum(totalRuns) as total | eval ESS_Failure_Rate = round((failed * 1) / total * 100, 2) | stats max(ESS_Failure_Rate) as ESS_Failure_Rate | Tile | % of Jobs Failing |

| 4 | ESS_Execution_Outcome_Summary | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats sum(succeededRuns) as success, sum(failedRuns) as failed, sum(cancelledRuns) as cancelled, sum(warningRuns) as warning, sum(runningRuns) as running, sum(blockedRuns) as blocked, sum(queuePressure) as queue | Bar | Aggregates execution results across all jobs. Why it matters • Reliability posture • CIS: failure monitoring |

| 5 | ESS_LatestRuntimeSnapShotTrend | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | timestats avg(lastRuntimeMinutes) as avgRuntime | Line | Shows average runtime evolution over time. Why it matters • Capacity & performance planning • Early degradation visibility |

| 6 | ESS_FailureSignals | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | where failedRuns > 0 | timestats sum(failedRuns) AS failureEvents | Line | How many job failures occurred per time bucket? |

| 7 | ESS_Job_Alerts | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | where alertReason != ” | stats count as alertCount by alertReason | Pie | Shows why alerts are firing Helps justify predictive model to stakeholders |

| 8 | ESS_TopJobs | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats sum(totalRuns) AS runs BY jobName | sort -runs | head 10 | Bar | Which jobs run the most (volume)? Ranks ESS jobs by total execution volume within the selected time range. |

| 9 | ESS_TopSuccessfullJobs | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats sum(succeededRuns) as succeededRuns, sum(totalRuns) as totalRuns by jobName | eval successPct = (succeededRuns * 100) / totalRuns | where successPct >= 99 | sort -successPct | head 10 | Bar | Highlights ESS jobs with consistently high success rates |

| 10 | ESS_TopFailingJobs | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats sum(failedRuns) as failedRuns, sum(totalRuns) as totalRuns by jobName | eval errorRate = (failedRuns * 100) / totalRuns | where totalRuns > 0 | where errorRate > 1 | sort -errorRate | head 20 | Bar | Jos which are failing , calculated bby Error Runs/Total Runs |

| 11 | ESS_Top10RiskyJobs | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats max(jobRiskScore) as jobRiskScore, max(slaBreachProbability) as slaRisk, max(predictedFailureProbability) as predictedFailureRisk, max(runtimeVolatilityIndex) as volatility by jobName, jobIdentifier | sort -jobRiskScore | top 10 jobRiskScore | Horizontal Bar | Show Top 10 Risky Jobs , Counts jobs with composite jobRiskScore , derived from: • Failure probability • SLA risk • Runtime volatility • Trend slope • Stuck probability |

| 12 | ESS_Longest_Running_Jobs | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats max(lastRuntimeMinutes) as runtime by jobName | sort -runtime | top 10 runtime | Bar | Identifies jobs with highest runtime. Why it matters • Bottleneck analysis • Capacity planning |

| 13 | ESS_High_Frequency_Scheduled_Jobs | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | where highFrequencyScheduleFlag = Y | stats sum(totalRuns) as totalRuns by jobName | sort -totalRuns | Horizontal Bar | Shows Jobs which are Scheduled very Frequently |

| 14 | ESS_High_Volume_Runs | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | where totalRuns > 10000 | stats sum(totalRuns) as totalRuns by jobName | sort -totalRuns | Sunburst | Shows jobs with: • 20k+ runs • MEDIUM confidence • Low visible failures These are latent risks, Small defect × massive volume = systemic outage |

| 15 | ESS_Runtime_Volatility | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats avg(runtimeVolatilityIndex) as volatility by jobName | top 10 volatility | Horizontal Bar | Identifies jobs with unstable runtimes using: runtimeVolatilityIndex = stddev / mean Why it matters • Predicts failure likelihood • CIS: anomaly detection |

| 16 | ESS_Jobs_LikelytoFail | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | where predictedFailureProbability >= 0.1 | stats max(predictedFailureProbability) as predictedFailureProbability by jobName | sort -predictedFailureProbability | top 10 predictedFailureProbability | Horizontal Bar | Top ten Jobs which are Likely to Fail with predictedFailureProbability >= 0.1 |

| 17 | ESS_SLA_Risk_Jobs | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | where slaBreachProbability >= 0.2 | stats max(slaBreachProbability) as slaBreachProbability, max(avgRuntimeMinutes) as avgRuntimeMinutes, max(p95RuntimeBaselineMinutes) as p95Baseline by jobName, jobIdentifier | sort -slaBreachProbability | Bar | Avg SLA risk per job. |

| 18 | ESS_Silent_Risk_Jobs | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | where failuresLast24Hours = 0 | where jobRiskScore >= 0.6 or slaBreachProbability >= 0.4 | stats max(jobRiskScore) as jobRiskScore, max(slaBreachProbability) as slaBreachProbability by jobName | sort -jobRiskScore, slaBreachProbability | Bar | Jobs showing high predicted risk or SLA breach probability despite zero recent failures |

| 19 | ESS_Runtime_vs_P95 | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats avg(lastRuntimeMinutes) as actual, avg(p95RuntimeBaselineMinutes) as baseline by jobName | eval deviation = actual – baseline | sort -deviation | Bar | Compares actual runtime vs historical P95 baseline. Why it matters • SLA drift detection • CIS: performance thresholds |

| 20 | ESS_Jobs_By_Application | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats count by FusionApplication | sort -Count | Horizontal Bar | Aggregates jobs by Fusion application/module. |

| 21 | ESS_Jobs_By_Submitter | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats count as runs by FusionSubmitter | sort -runs | Horizontal Bar | • Identifies: o Noisy service accounts o Rogue integrations o Overactive modules • CIS relevance: accountability & traceability of automated jobs |

| 22 | ESS_Jobs_By_ProcessGroups | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats count by FusionProcessGroup | Pie | • Shows ESS cluster pressure • Helps infra teams correlate: o CPU spikes o JVM GC o SOA congestion |

| 23 | ESS_Jobs_ScheduledvsAdhoc | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | stats sum(scheduledCount) as SCHEDULED, sum(adhocCount) as ADHOC | Bar | Why it matters • Detects uncontrolled ad-hoc usage • Supports governance & change management |

| 24 | ESS_Risk_Distribution | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | eval riskBucket = if(jobRiskScore >= 0.75, High, if(jobRiskScore >= 0.6, Medium, if(jobRiskScore >= 0.4, Low, ‘Very Low’))) | where failuresLast24Hours > 0 or failuresLast7Days > 0 | stats count as jobCount, avg(slaBreachProbability) as avgSlaRisk, avg(runtimeVolatilityIndex) as avgVolatility by riskBucket | Bar | This chart shows where our ESS job risk is concentrated, and whether that risk is driven by instability or SLA exposure, allowing us to focus remediation where it matters most. |

| 25 | ESS_Runtime_Regression_Drivers | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | eval regressionDriver = if(succeededRuns < 3, ‘Insufficient History’, runtimeVolatilityIndex > 1, ‘High Runtime Variance’, runtimeTrendSlopePerRun > 0, ‘Upward Runtime Trend’, lastRuntimeMinutes > previousRuntimeMinutes, ‘Recent Runtime Spike’, Stable) | stats count as jobCount by runtimeRegressionStatus, regressionDriver | sort runtimeRegressionStatus, -jobCount | Bar | This explains why jobs are NORMAL vs REGRESSED vs INSUFFICIENT. |

| 26 | ESS_Runtime_Regression_Distribution | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | fields jobName, runtimeRegressionStatus, succeededRuns, lastRuntimeMinutes, previousRuntimeMinutes, runtimeVolatilityIndex, runtimeTrendSlopePerRun, jobRiskScore | stats count as jobCount, avg(succeededRuns) as avgSucceededRuns, avg(lastRuntimeMinutes) as avgLastRuntime, avg(previousRuntimeMinutes) as avgPreviousRuntime, avg(runtimeVolatilityIndex) as avgVolatility, avg(runtimeTrendSlopePerRun) as avgTrendSlope, avg(jobRiskScore) as avgRiskScore by runtimeRegressionStatus | sort -jobCount | Bar | Classifies jobs as NORMAL / RUNTIME_REGRESSION based on: lastRuntime > previousRuntime × regressionFactor Why it matters • Detects performance decay • CIS: baseline deviation monitoring |

| 27 | ESS_Confidence_Distribution | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | fields jobName, confidenceLevel, succeededRuns, runtimeVolatilityIndex, runtimeTrendSlopePerRun, jobRiskScore | stats count as jobCount, avg(succeededRuns) as avgRuns, avg(runtimeVolatilityIndex) as avgVolatility, avg(jobRiskScore) as avgRisk by confidenceLevel | eval confidenceOrder = if(confidenceLevel = HIGH, 1, if(confidenceLevel = MEDIUM, 2, 3)) | sort confidenceOrder | fields -confidenceOrder | Bar | Distribution of model confidence. |

| 28 | ESS_Confidence_Gap_Jobs | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | where confidenceLevel = “LOW” | fields jobName, succeededRuns, runtimeVolatilityIndex, runtimeTrendSlopePerRun | Bar | Identifies jobs where alerting and predictions are unreliable due to insufficient run history or unstable metrics |

| 29 | ESS_Job_Activity_Heatmap | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | timestats sum(totalRuns) as executions by jobName | Heatmap | Which jobs are active, and when? Shows when each ESS job had activity signals over time. Each cell indicates the presence of job execution activity in that time bucket, not the exact run count. |

| 30 | ESS_Active_Alerts | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | where alertFlag = Y | fields alertFlag, JobAlertSeverity, alertReason, jobName, jobIdentifier, FusionApplication, FusionProduct, requestType, confidenceLevel, jobRiskScore, predictedFailureProbability, slaBreachProbability, stuckJobProbability, runtimeRegressionStatus, failuresLast24Hours, failuresLast7Days, lastRunTimestamp, FusionLastRunEndTime, FusionlastRunTimestamp, runningRuns, maxRunningDurationHours, FusionRequestID, lastRequestId, lastFailedRequestId, lastSuccessRequestId, longestRunningRequestId, scheduleOverdueFlag, highFrequencyScheduleFlag, -Entity, -‘Entity Type’, -‘Host Name (Server)’, -‘Problem Priority’, -Label, -‘Log Source’ | Table | Lists all jobs currently in alert state. Why it matters • SOC / Ops action list • CIS: incident detection |

| 31 | ESS_Job_Detail_Table | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | fields jobName, FusionApplication, FusionProduct, requestType, FusionSubmitter, alertFlag, alertReason, JobAlertSeverity, lastRequestId, lastSuccessRequestId, lastFailedRequestId, failuresLast24Hours, failuresLast7Days, lastRuntimeMinutes, FusionlastRunTimestamp, FusionlastSuccessTimestamp, confidenceLevel, jobRiskScore, slaBreachProbability, stuckJobProbability, runtimeRegressionStatus, runtimeVolatilityIndex, runtimeTrendSlopePerRun | fields -Entity, -‘Entity Type’, -‘Host Name (Server)’, -‘Problem Priority’, -Label, -‘Log Source’ | Table | Single pane of glass for investigation. Why it matters • Root cause analysis • CIS: audit & traceability |

| 32 | ESS_Active_Alerts_DetectionRule | Log Source’ = Fusion_ESS_Job_Health_Source_V30 | where alertFlag = Y | stats count(alertFlag) as alertCount by jobName, JobAlertSeverity | TreeMap | Used For Detection Rule – FusionESSActiveAlertsDetectionRule |

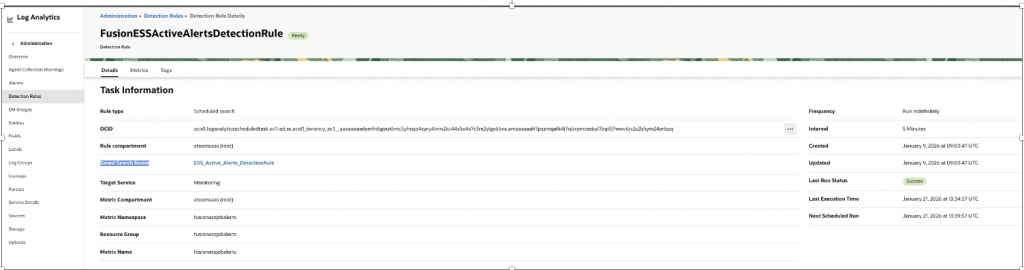

5 – Create Detection Rules

Use Saved Search – ESS_Active_Alerts_DetectionRule to build Detection rule which will create metrics in OCI Monitoring Service & eventually used in OCI Alarm.

| Property Name | Property Value |

| Name | FusionESSActiveAlertsDetectionRule |

| Rule Type | Scheduled search |

| Target Service | Monitoring |

| MetricNamespace | fusionessjobalerts |

| Resource Group | fusionessjobalerts |

| Metric Name | fusionessjobalerts |

Similarly, you can create Detection rule for any relevant Event you wanted to be notified using above Widgets(Saved Search) or you can create your own, as for Eg..

- slaBreachProbability >= 0.4

- failuresLast24Hours = 0 AND (jobRiskScore >= 0.6 OR slaBreachProbability >= 0.4)

- For Monitor Failure of any particular critical ESS Job (jobName = ‘<JobName>’)

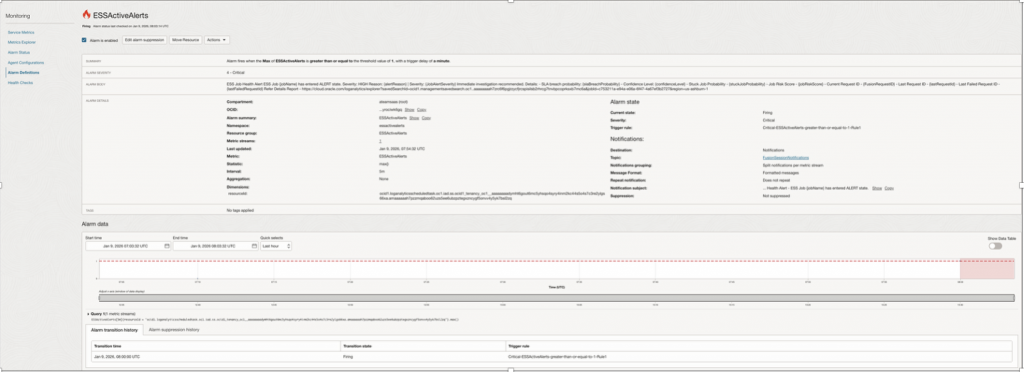

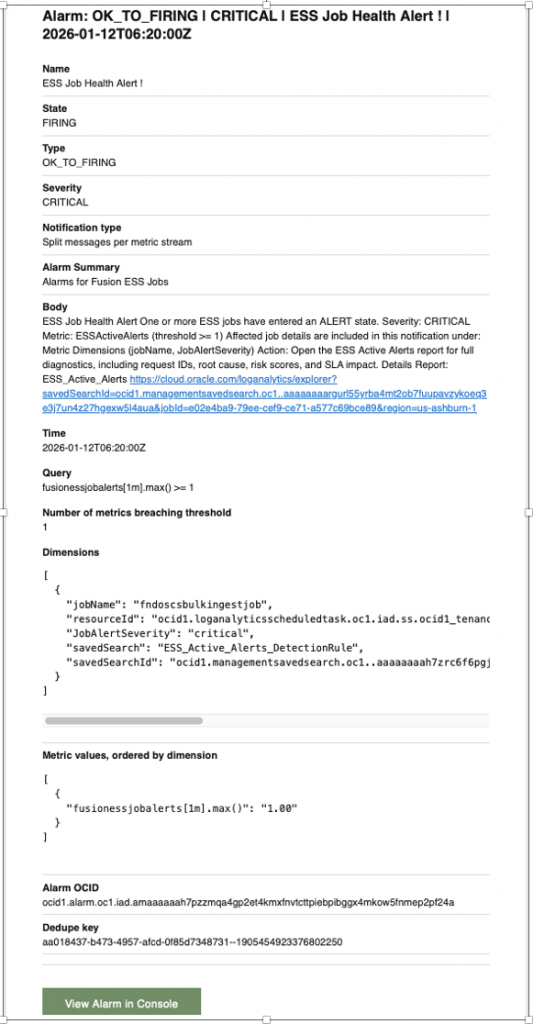

6 – Create OCI Alarms

Build Alarms using Alert Flag which captures various health parameters as

– Runtime regression

– Failure spikes

– Missed critical jobs

| Property Name | Property Value |

| Alarm Name | ESSActiveAlerts |

| MetricNamespace | fusionessjobalerts |

| Resource Group | fusionessjobalerts |

| Metric Name | fusionessjobalerts |

| Interval | 1 Minute |

| Statistics | Max |

| Trigger Rule 1 – Operator (greater than or equal to ) | 1 Minute |

| Trrigger delay | 1 Minute |

| Alarm Severity | Critical |

| Alarm Body | ESS Job Health Alert One or more ESS jobs have entered an ALERT state. Severity: CRITICAL Metric: ESSActiveAlerts (threshold >= 1) Affected job details are included in this notification under: Metric Dimensions (jobName, JobAlertSeverity) Action: Open the ESS Active Alerts report for full diagnostics, including request IDs, root cause, risk scores, and SLA impact. Details Report: ESS_Active_Alerts <URL of Widget – ESS_Active_Alerts > |

| Destination Service | Notifications |

| Topic | <Topic created for Notification> |

| Notification Subject | ESS Job Health Alert ! |

| Message grouping | Split Notifications Per metric |

| Message format | Send formatted message |

Alarm Notifications –

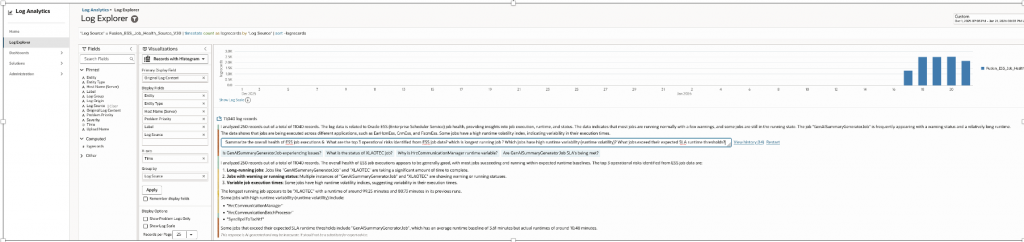

7 – Analyze data using LoganAI

AI analysis using LoganAI can be done over collected ESS Job data from Log Explorer.

Traditional queries tell us what happened. Logan AI lets us ask why it happened, what will happen next, and where to focus directly on Fusion ESS operational data

Search Query –

'Log Source' = Fusion_ESS_Job_Health_Source_V30 | timestats count as logrecords by 'Log Source' | sort -logrecordsSample Questions –

- Summarize the overall health of ESS job executions

- What are the top 3 operational risks identified from ESS job data?

- Which is longest running job ?

- Which jobs have high runtime variability (runtime volatility)?

- What jobs exceed their expected SLA runtime thresholds?

- Which Fusion application generates the most ESS job executions (HCM, ERP, SCM)?

- Are there early warning indicators before job failures occur?

- Which jobs should be proactively monitored more closely?

Conclusion

The ESS Job Health & Risk Observatory represents an industry-grade monitoring solution. By combining BI-driven analytics, OCI Log analytics observability & AI features (LoganAI), it shifts ESS monitoring from reactive reporting to proactive risk management.

This design aligns with SRE best practices and scales with enterprise complexity.

This solution turns ESS from a black box into a predictable, measurable, and governable system, it converts raw ESS execution data into actionable operational intelligence.

This is not just monitoring – it is predictive analytics:

- Failure Probability – Predicts likelihood of next-run failure with low noise

- Runtime Regression Detection – Identifies jobs slowing down vs historical P95 baselines

- Trend Slope Analysis – Detects long-term degradation before outages occur

- Composite Job Risk Scoring – Single score combining failures, SLA risk, volatility, and trend

- Explainable Alerts – Every alert includes why it fired (failure, SLA risk, regression, stuck)

We are no longer asking why did this fail?

We are asking what will fail next, and why?

References

- Oracle MOS: Sample SQL Queries for Monitoring ESS (Doc ID 2820161.1)

- Oracle ESS Scheduler REST API Documentation

- Fusion Apps Observability by Collecting Enterprise Scheduler ESS Logs Using OCI Logging Analytics REST API Ingestion

- Creating Innovative User Experience for Fusion Cloud Applications Scheduled Processes

Reference Code Artifacts

BI Data Model Query

SELECT

/* =========================

Job FIELDS FOR API

========================= */

Q.JOB_IDENTIFIER,

Q.JOB_NAME,

Q.APPLICATION,

Q.PRODUCT,

Q.REQUEST_TYPE,

Q.LAST_RUN_TIMESTAMP,

Q.LAST_RUN_END_TIME,

Q.REQUEST_START_TIME,

Q.REQUEST_EVENT_TIME,

Q.REQUEST_COMPLETE_TIME,

Q.REQUESTID,

Q.PARENTREQUESTID,

Q.PROCESSGROUP,

Q.SUBMITTER,

Q.SCHEDULED,

--

Q.LAST_SUCCESS_TIMESTAMP,

Q.LAST_RUNTIME_MINUTES,

Q.PREVIOUS_RUNTIME_MINUTES,

Q.RUNTIME_REGRESSION_STATUS,

--

Q.AVG_RUNTIME_BASELINE_MIN,

Q.P95_RUNTIME_BASELINE_MIN,

--

Q.FAILURES_LAST_24_HOURS,

Q.FAILURES_LAST_7_DAYS,

--

Q.CURRENTLY_RUNNING_COUNT,

Q.MAX_RUNNING_DURATION_HOURS,

--

Q.TOTAL_RUNS,

Q.FAILED_RUNS,

Q.SUCCEEDED_RUNS,

Q.RUNNING_RUNS,

Q.BLOCKED_RUNS,

Q.CANCELLED_RUNS,

Q.WARNING_RUNS,

Q.queue_pressure,

--

Q.AVG_RUNTIME_MINUTES,

Q.STDDEV_RUNTIME_MINUTES,

--

Q.PREDICTED_FAILURE_PROBABILITY,

Q.SLA_BREACH_PROBABILITY,

Q.RUNTIME_VOLATILITY_INDEX,

Q.RUNTIME_TREND_SLOPE_PER_RUN,

Q.STUCK_JOB_PROBABILITY,

--

Q.CONFIDENCE_LEVEL,

Q.CONFIDENCE_SCORE_WEIGHTED,

Q.JOB_RISK_SCORE,

--

Q.SCHEDULE_START_TIME,

Q.SCHEDULE_END_TIME,

Q.SCHEDULE_FREQUENCY,

Q.SCHEDULE_INTERVAL,

Q.SCHEDULE_ENDED_FLAG,

Q.SCHEDULE_OVERDUE_FLAG,

Q.HIGH_FREQUENCY_SCHEDULE_FLAG,

--

Q.LAST_REQUEST_ID,

Q.LAST_SUCCESS_REQUEST_ID,

Q.LAST_FAILED_REQUEST_ID,

Q.LONGEST_RUNNING_REQUEST_ID,

--

Q.ADHOC_COUNT ,

Q.SCHEDULE_COUNT ,

/* =========================

META FIELDS FOR API

========================= */

TO_CHAR(

TO_TIMESTAMP_TZ(:P_FROM_TIMESTAMP,'YYYY-MM-DD"T"HH24:MI:SS.FF3'),

'YYYY-MM-DD"T"HH24:MI:SS"Z"'

) AS META_FROM_TIMESTAMP,

TO_CHAR(

TO_TIMESTAMP_TZ(:P_TO_TIMESTAMP,'YYYY-MM-DD"T"HH24:MI:SS.FF3'),

'YYYY-MM-DD"T"HH24:MI:SS"Z"'

) AS META_TO_TIMESTAMP,

TO_CHAR(

SYSTIMESTAMP AT TIME ZONE 'UTC',

'YYYY-MM-DD"T"HH24:MI:SS"Z"'

) AS META_GENERATED_AT,

COUNT(1) OVER () AS META_RECORD_COUNT,

/* Alert decision */

CASE

/* HARD SIGNALS */

WHEN Q.FAILURES_LAST_24_HOURS > 0 THEN 'Y'

WHEN Q.STUCK_JOB_PROBABILITY >= NVL(:P_STUCK_JOB_PROBABILITY,0.8) THEN 'Y'

WHEN Q.SCHEDULE_OVERDUE_FLAG = 'Y' THEN 'Y'

/* SOFT / PREDICTIVE SIGNALS */

WHEN Q.CONFIDENCE_LEVEL IN ('MEDIUM', 'HIGH')

AND Q.RUNTIME_REGRESSION_STATUS = 'RUNTIME_REGRESSION'

THEN 'Y'

WHEN Q.CONFIDENCE_LEVEL IN ('MEDIUM', 'HIGH')

AND Q.JOB_RISK_SCORE >= NVL(:P_JOB_RISK_SCORE,0.6)

THEN 'Y'

WHEN Q.CONFIDENCE_LEVEL IN ('MEDIUM', 'HIGH')

AND Q.SLA_BREACH_PROBABILITY >= NVL(:P_SLA_BREACH_PROBABILITY,0.4)

THEN 'Y'

ELSE 'N'

END AS ALERT_FLAG,

-- Alert Reason

CASE

WHEN

(

Q.FAILURES_LAST_24_HOURS > 0

OR Q.STUCK_JOB_PROBABILITY >= NVL(:P_STUCK_JOB_PROBABILITY,0.8)

OR Q.SCHEDULE_OVERDUE_FLAG = 'Y'

OR (

Q.CONFIDENCE_LEVEL IN ('MEDIUM','HIGH')

AND (

Q.RUNTIME_REGRESSION_STATUS = 'RUNTIME_REGRESSION'

OR Q.JOB_RISK_SCORE >= NVL(:P_JOB_RISK_SCORE,0.6)

OR Q.SLA_BREACH_PROBABILITY >= NVL(:P_SLA_BREACH_PROBABILITY,0.4)

)

)

)

THEN

CASE

WHEN Q.FAILURES_LAST_24_HOURS > 0 THEN 'FAILURE'

WHEN Q.STUCK_JOB_PROBABILITY >= NVL(:P_STUCK_JOB_PROBABILITY,0.8) THEN 'STUCK'

WHEN Q.SCHEDULE_OVERDUE_FLAG = 'Y' THEN 'SCHEDULE_OVERDUE'

WHEN Q.RUNTIME_REGRESSION_STATUS = 'RUNTIME_REGRESSION' THEN 'RUNTIME_REGRESSION'

WHEN Q.JOB_RISK_SCORE >= NVL(:P_JOB_RISK_SCORE,0.6) THEN 'HIGH_RISK_SCORE'

WHEN Q.SLA_BREACH_PROBABILITY >= NVL(:P_SLA_BREACH_PROBABILITY,0.4) THEN 'SLA_RISK'

END

ELSE NULL

END AS ALERT_REASON,

-- Alert Severity

CASE

WHEN

(

Q.FAILURES_LAST_24_HOURS > 0

OR Q.STUCK_JOB_PROBABILITY >= NVL(:P_STUCK_JOB_PROBABILITY,0.8)

OR Q.SCHEDULE_OVERDUE_FLAG = 'Y'

OR (

Q.CONFIDENCE_LEVEL IN ('MEDIUM','HIGH')

AND (

Q.RUNTIME_REGRESSION_STATUS = 'RUNTIME_REGRESSION'

OR Q.JOB_RISK_SCORE >= NVL(:P_JOB_RISK_SCORE,0.6)

OR Q.SLA_BREACH_PROBABILITY >= NVL(:P_SLA_BREACH_PROBABILITY,0.4)

)

)

)

THEN

CASE

WHEN Q.FAILURES_LAST_24_HOURS > 0 THEN 'CRITICAL'

WHEN Q.STUCK_JOB_PROBABILITY >= NVL(:P_STUCK_JOB_PROBABILITY,0.8) THEN 'CRITICAL'

WHEN Q.SCHEDULE_OVERDUE_FLAG = 'Y' THEN 'HIGH'

WHEN Q.JOB_RISK_SCORE >= 0.75 THEN 'HIGH'

WHEN Q.JOB_RISK_SCORE >= 0.6 THEN 'MEDIUM'

ELSE 'MEDIUM'

END

ELSE 'LOW'

END AS ALERT_SEVERITY,

-- Additional Attribute for Future

'JOB_DEDUPE_KEY' ATTRIBUTE_NAME1,

Q.JOB_DEDUPE_KEY ATTRIBUTE_VAL1,

NULL ATTRIBUTE_NAME2,

NULL ATTRIBUTE_VAL2,

NULL ATTRIBUTE_NAME3,

NULL ATTRIBUTE_VAL3,

NULL ATTRIBUTE_NAME4,

NULL ATTRIBUTE_VAL4,

NULL ATTRIBUTE_NAME5,

NULL ATTRIBUTE_VAL5

FROM

(

SELECT

/* Unique job identifier derived from ESS job name or definition */

J.JOB_KEY AS JOB_IDENTIFIER,

/* Display name of the ESS job */

J.JOB_DISPLAY_NAME AS JOB_NAME,

J.APPLICATION AS APPLICATION,

J.PRODUCT AS PRODUCT,

J.TYPE AS REQUEST_TYPE,

/* Most recent submission time of the job */

J.LAST_RUN_TIME AS LAST_RUN_TIMESTAMP,

J.LAST_RUN_END_TIME AS LAST_RUN_END_TIME,

J.REQUEST_START_TIME AS REQUEST_START_TIME,

-- REQUEST_EVENT_TIME For Log analytics Time Window Incremental Run

J.LAST_RUN_TIME AS REQUEST_EVENT_TIME,

J.REQUEST_COMPLETE_TIME AS REQUEST_COMPLETE_TIME,

J.REQUESTID AS REQUESTID,

J.PARENTREQUESTID AS PARENTREQUESTID,

J.PROCESSGROUP AS PROCESSGROUP,

J.SUBMITTER AS SUBMITTER,

J.SCHEDULED AS SCHEDULED,

J.JOB_DEDUPE_KEY AS JOB_DEDUPE_KEY,

/* Most recent successful completion time */

LS.LAST_SUCCESS_TIME AS LAST_SUCCESS_TIMESTAMP,

/* Runtime (minutes) of the most recent successful run */

ROUND(LS.LAST_RUNTIME_MIN, 2) AS LAST_RUNTIME_MINUTES,

/* Runtime (minutes) of the previous successful run */

ROUND(LS.PREV_RUNTIME_MIN, 2) AS PREVIOUS_RUNTIME_MINUTES,

/* Indicates whether runtime has regressed compared to previous run */

CASE

WHEN LS.PREV_RUNTIME_MIN IS NULL THEN

'INSUFFICIENT_HISTORY'

WHEN JS_HIST.SUCCEEDED_RUNS >= 2 AND LS.PREV_RUNTIME_MIN >= 1

AND LS.LAST_RUNTIME_MIN > LS.PREV_RUNTIME_MIN * P.REGRESSION_FACTOR

THEN

'RUNTIME_REGRESSION'

ELSE

'NORMAL'

END AS RUNTIME_REGRESSION_STATUS,

/* Average runtime over the last N days */

ROUND(RB.AVG_RUNTIME_BASELINE_MIN, 2) AS AVG_RUNTIME_BASELINE_MIN,

/* 95th percentile runtime baseline over the last N days */

ROUND(RB.P95_RUNTIME_BASELINE_MIN, 2) AS P95_RUNTIME_BASELINE_MIN,

/* Number of failed executions in the last 24 hours */

NVL(FC.FAILED_24H_COUNT, 0) AS FAILURES_LAST_24_HOURS,

/* Number of failed executions in the last 7 days */

NVL(FC.FAILED_7D_COUNT, 0) AS FAILURES_LAST_7_DAYS,

/* Count of currently running / blocked / cancelling jobs */

NVL(CR.RUNNING_COUNT, 0) AS CURRENTLY_RUNNING_COUNT,

/* Maximum number of hours a job has been running continuously */

ROUND(NVL(CR.RUNNING_HOURS, 0), 2) AS MAX_RUNNING_DURATION_HOURS,

JS_WIN.TOTAL_RUNS AS TOTAL_RUNS,

JS_WIN.failed_runs AS failed_runs,

JS_WIN.succeeded_runs AS succeeded_runs,

JS_WIN.running_runs AS running_runs,

JS_WIN.blocked_runs AS blocked_runs,

JS_WIN.cancelled_runs AS cancelled_runs,

JS_WIN.warning_runs AS warning_runs,

JS_WIN.queue_pressure AS queue_pressure,

ROUND(JS_HIST.AVG_RUNTIME, 2) AS AVG_RUNTIME_MINUTES,

ROUND(JS_HIST.STDDEV_RUNTIME,2) AS STDDEV_RUNTIME_MINUTES,

/* Bayesian-smoothed probability of failure on the next run */

ROUND((JS_HIST.FAILED_RUNS + 0.5 * JS_HIST.WARNING_RUNS) / (JS_HIST.TOTAL_RUNS), 2) AS PREDICTED_FAILURE_PROBABILITY,

/* Probability that runtime exceeds historical SLA (p95 baseline) */

ROUND(NVL(SP.SLA_BREACH_PROBABILITY, 0), 2) AS SLA_BREACH_PROBABILITY,

/* Runtime volatility index (stddev / mean) */

ROUND((JS_HIST.STDDEV_RUNTIME / NULLIF(JS_HIST.AVG_RUNTIME, 0)), 3) AS RUNTIME_VOLATILITY_INDEX,

/* Trend slope indicating increasing or decreasing runtime over time */

ROUND(TC.RUNTIME_TREND_SLOPE, 3) AS RUNTIME_TREND_SLOPE_PER_RUN,

/* Probability that a currently running job is stuck */

ROUND(NVL(ST.STUCK_PROBABILITY, 0), 3) AS STUCK_JOB_PROBABILITY,

/* Confidence level indicating reliability of job health signals */

CASE

WHEN JS_HIST.SUCCEEDED_RUNS < NVL(:P_MIN_SUCCESS_RUNS,3) THEN 'LOW'

WHEN

(

LEAST(JS_HIST.TOTAL_RUNS / 10, 1) * 0.5

+ (1 - LEAST(JS_HIST.STDDEV_RUNTIME / NULLIF(JS_HIST.AVG_RUNTIME, 0), 1)) * 0.3

+

CASE

WHEN TC.RUNTIME_TREND_SLOPE IS NOT NULL THEN

0.2

ELSE

0

END

) >= 0.75

THEN

'HIGH'

WHEN

(

LEAST(JS_HIST.TOTAL_RUNS / 10, 1) * 0.5

+ (1 - LEAST(JS_HIST.STDDEV_RUNTIME / NULLIF(JS_HIST.AVG_RUNTIME, 0), 1)) * 0.3

+

CASE

WHEN TC.RUNTIME_TREND_SLOPE IS NOT NULL THEN

0.2

ELSE

0

END

) >= 0.40

THEN

'MEDIUM'

ELSE

'LOW'

END AS CONFIDENCE_LEVEL,

ROUND(

LEAST(JS_HIST.TOTAL_RUNS / 10, 1) * 0.5

+ (1 - LEAST(JS_HIST.STDDEV_RUNTIME / NULLIF(JS_HIST.AVG_RUNTIME, 0), 1)) * 0.3